Video Processing - GStreamer

Introduction

Multimedia processing usually involves several tasks, such as encoding and decoding data, multiplexing and demultiplexing media containers, and handling network handshakes. These tasks become challenging when you also have to take hardware capabilities, display specifications, and similar constraints into account. GStreamer is a framework that provides plugins to automate many of these tasks and facilitate the development of multimedia applications.

This article presents the main concepts and use cases of GStreamer on embedded Linux devices. The GStreamer framework supports different programming languages. For simplicity, this article provides only command-line examples. You can, however, apply those concepts in any supported programming language.

The following topics are covered:

- GStreamer Pipelines

- Command Line Tools

- Video Playback

- Video Encoding

- Video Decoding

- Video Capturing

- Debugging Tools

- Additional Resources

GStreamer Pipelines

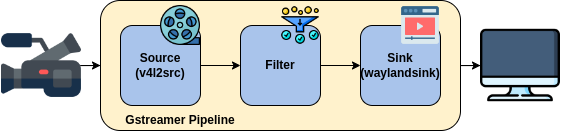

GStreamer is a framework based on a pipeline architecture. That means it handles video and audio data by linking elements together. Each element typically performs a specific function, such as video encoding, decoding, streaming, or playback. When using GStreamer, a developer's work mainly involves selecting the necessary elements and configuring how data flows between them.

Data flows between elements through pads, which are the inputs and outputs of an element. Each pad advertises capabilities (caps) that describe the data it can handle; for example, a pad can restrict the video formats an element processes. There are two types of pads:

- Sink pad: through which an element gets incoming data.

- Source pad: through which an element sends outgoing data to the next element.

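For elements to be linked together, their pads must have compatible capabilities: the source pad of one element and the sink pad of the next must support at least one common format. Examples of capabilities are the pixel format (such as RGB), resolution, and framerate.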
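To see capability matching in practice, the sketch below wraps gst-launch-1.0 in a small shell function that tries to link a source element to another element and reports whether the pipeline ran. The function name can_link and the GST_LAUNCH override variable are hypothetical, introduced only for illustration, and the sketch assumes the source element supports the num-buffers property, as videotestsrc does.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# can_link SRC NEXT
# Runs "SRC num-buffers=1 ! NEXT ! fakesink" quietly and returns the exit
# status of gst-launch-1.0, which is non-zero if, for example, the caps of
# the SRC source pad and the NEXT sink pad have nothing in common.
can_link() {
    "$GST_LAUNCH" -q "$1" num-buffers=1 ! "$2" ! fakesink >/dev/null 2>&1
}

# Usage on a device:
#   can_link videotestsrc videoconvert && echo "linked"
#   can_link videotestsrc h264parse || echo "not linked"
```

With a real GStreamer installation, the second call is expected to fail: videotestsrc only produces raw video (video/x-raw), while the h264parse sink pad only accepts H.264 data (video/x-h264).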

For more information, see GStreamer foundations and GStreamer Table of Concepts.

Command Line Tools

GStreamer command line tools enable quick prototyping, testing, and debugging of pipelines. The commands worth highlighting are gst-launch-1.0 and gst-inspect-1.0.

gst-launch-1.0

The gst-launch-1.0 command builds and runs basic GStreamer pipelines. It follows the structure below:

# gst-launch-1.0 <pipeline>

For example, the following command connects the videotestsrc element directly to the waylandsink element:

# gst-launch-1.0 videotestsrc ! waylandsink

Note that elements are separated by exclamation marks (!).

You can restrict the type of data that flows between two elements by placing pad capabilities (a caps filter) between them. In the following pipeline, video/x-raw indicates that videotestsrc outputs raw video, and width=1920, height=1080, framerate=60/1 set the video resolution and framerate:

# gst-launch-1.0 videotestsrc ! video/x-raw, width=1920, height=1080, framerate=60/1 ! waylandsink
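When you try several resolutions and framerates, it can help to parameterize the pipeline above. The following is a minimal sketch; show_test_pattern and the GST_LAUNCH override variable are hypothetical names. The caps filter is passed as one quoted argument without spaces, which gst-launch-1.0 parses the same way as the unquoted form.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# show_test_pattern [WIDTH] [HEIGHT] [FPS]  (defaults: 1920 1080 60)
show_test_pattern() {
    width="${1:-1920}"
    height="${2:-1080}"
    fps="${3:-60}"
    # The caps filter between the two elements fixes format, size, and rate.
    "$GST_LAUNCH" videotestsrc \
        ! "video/x-raw,width=${width},height=${height},framerate=${fps}/1" \
        ! waylandsink
}

# Usage on a device: show_test_pattern 1280 720 30
```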

gst-launch-1.0 is designed for testing and debugging purposes. You should not build your application on top of it.

gst-inspect-1.0

The gst-inspect-1.0 command lists the available GStreamer plugins and elements or displays details about a particular plugin or element.

# gst-inspect-1.0 <plugin/element>

The gst-inspect-1.0 command can be a helpful tool when developing GStreamer pipelines. You can use it to see element descriptions, element properties, and pad templates (supported resolutions, formats, and framerates).

The following command displays information about the videotestsrc element.

# gst-inspect-1.0 videotestsrc

With no arguments, the command displays a list of all available elements and plugins. You can use that, for instance, to find elements that handle H.264 videos, as follows:

# gst-inspect-1.0 | grep h264
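Before running a pipeline that depends on platform-specific elements such as vpuenc_h264, you can use gst-inspect-1.0 to check that every element is installed. The sketch below assumes that gst-inspect-1.0 exits with a non-zero status when an element is not found; require_elements and the GST_INSPECT override variable are hypothetical names.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-inspect-1.0 binary.
GST_INSPECT="${GST_INSPECT:-gst-inspect-1.0}"

# require_elements ELEMENT...
# Prints each missing element and returns 1 if at least one is missing.
require_elements() {
    missing=0
    for element in "$@"; do
        # Assumption: a non-zero exit status means the element was not found.
        if ! "$GST_INSPECT" "$element" >/dev/null 2>&1; then
            echo "missing element: $element"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on a device:
#   require_elements videotestsrc vpuenc_h264 h264parse mp4mux filesink || exit 1
```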

Video Playback

Multimedia playback involves getting a media file from a source element and consuming it with a sink element. In the following pipeline, raw video goes from videotestsrc to waylandsink, which then sends the data to a Wayland display server. On Toradex modules, the Weston Compositor displays that data in a window.

# gst-launch-1.0 videotestsrc ! waylandsink

The difficulty is that media files, known as containers, usually hold multiple media streams, such as video, audio, and subtitles. Playing them may require different elements to separate those streams (demultiplexing), process them (decoding), and send them to a compositor in the correct format. At first, it may be hard to know which elements to use and how to configure and connect them. Luckily, GStreamer provides the playbin element, which acts as both source and sink and automatically selects the elements needed to play the media.

The following command creates a playbin pipeline with gst-launch-1.0. Note that file:// URIs require an absolute path:

# gst-launch-1.0 playbin uri=file://<video file>

The playbin element automates the whole pipeline creation. To check which elements playbin used, see the Debugging Tools section.

Alternatively, you can use the gst-play-1.0 command-line tool, which is built on top of the playbin element:

# gst-play-1.0 <video file>
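Because playbin expects a URI rather than a path, a relative file name such as video.mp4 has to be converted to an absolute file:// URI first. The following is a minimal sketch using realpath; play_file and the GST_LAUNCH override variable are hypothetical names, and the sketch does not percent-encode special characters such as spaces.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# play_file PATH
play_file() {
    # realpath turns a relative path into an absolute one (and fails if the
    # directory does not exist). Characters such as spaces are NOT
    # percent-encoded here, so keep file names simple.
    abs_path="$(realpath "$1")" || return 1
    "$GST_LAUNCH" playbin "uri=file://${abs_path}"
}

# Usage on a device: play_file video.mp4
```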

Video Encoding

Encoding refers to the process of converting raw video/image to a more compact format. It is commonly applied before storing data or sending video over the internet.

The following example uses gst-launch-1.0 to create a pipeline that encodes the output of videotestsrc and stores it as videotestsrc.mp4:

# gst-launch-1.0 videotestsrc num-buffers=300 ! video/x-raw, width=1920, height=1080, framerate=60/1 ! videoconvert ! vpuenc_h264 ! video/x-h264 ! h264parse ! mp4mux ! filesink location=videotestsrc.mp4

Going into detail about each element of the above pipeline:

- videotestsrc: Produces video data in different formats. The num-buffers property sets how many frames are produced; 300 frames at 60 frames per second result in a 5-second video. The video/x-raw, width=1920, height=1080, framerate=60/1 caps filter sets the format, resolution, and framerate of the videotestsrc output.

- videoconvert: Converts between color formats when needed, ensuring compatibility between the videotestsrc source pad and the vpuenc_h264 sink pad.

- vpuenc_h264: Compresses the video to the H.264 standard (video encoding) with VPU acceleration. The video/x-h264 caps filter sets the format of this element's output (source pad), ensuring the next element gets data in the correct compression standard.

- h264parse: Parses the incoming H.264 data and produces a stream that the muxing element (mp4mux) can handle.

- mp4mux: Muxing element that creates media containers. It merges media streams into an .mp4 file.

- filesink: Writes incoming data to a file in the local file system.
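Since videotestsrc stops after num-buffers frames, the recording length follows from frames = seconds × fps (300 = 5 × 60 in the pipeline above). The sketch below computes num-buffers from a duration and adds the gst-launch-1.0 -e option, which sends an end-of-stream event on Ctrl+C so the muxer can finalize the file. encode_test_video and the GST_LAUNCH override variable are hypothetical names, and the sketch assumes vpuenc_h264 is available on your module.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# encode_test_video OUTPUT [SECONDS] [FPS]  (defaults: 5 s at 60 fps)
encode_test_video() {
    output="$1"
    seconds="${2:-5}"
    fps="${3:-60}"
    # videotestsrc stops after num-buffers frames: frames = seconds * fps
    frames=$((seconds * fps))
    # -e sends EOS on Ctrl+C so that mp4mux can finalize the file
    "$GST_LAUNCH" -e videotestsrc num-buffers="$frames" \
        ! "video/x-raw,width=1920,height=1080,framerate=${fps}/1" \
        ! videoconvert ! vpuenc_h264 ! video/x-h264 ! h264parse \
        ! mp4mux ! filesink location="$output"
}

# Usage on a device: encode_test_video clip.mp4 10 30   # 300 frames
```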

You can also use the encodebin element, which automatically creates an encoding pipeline, as follows:

# gst-launch-1.0 videotestsrc num-buffers=300 ! video/x-raw, width=1920, height=1080, framerate=60/1 ! encodebin ! filesink location=videotestsrc.mp4

You can use the automatically generated pipeline as a base for creating your own. To see a graph with the elements of the pipeline, check the Debugging Tools section.

Video Decoding

Decoding refers to the process of decompressing encoded media into a raw format, that is, a format that can be displayed on a screen.

The following example uses gst-launch-1.0 to create a pipeline that decodes a multimedia file and sends it to waylandsink, which displays the video on the Weston Compositor:

# gst-launch-1.0 filesrc location=<encoded_file_location> ! qtdemux ! h264parse ! vpudec ! waylandsink

Going into detail about each element of the above pipeline:

- filesrc: Reads data from a local file.

- qtdemux: Splits (demuxes) QuickTime-family containers, such as MP4 files, into their distinct streams, such as audio and video.

- h264parse: Parses the incoming H.264 data and produces a stream that the decoding element (vpudec) can handle.

- vpudec: Decodes the H.264 video stream into raw video frames with VPU acceleration.

- waylandsink: Displays raw video frames on a Wayland display server (Weston).
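The decoding pipeline above relies on the VPU-accelerated vpudec element. On a system where it is not installed, a software decoder can take its place. The following is a sketch under the assumption that the gst-libav package provides avdec_h264, which may not be part of every image; play_h264_mp4 and the GST_LAUNCH and GST_INSPECT override variables are hypothetical names.

```shell
#!/bin/sh
# Hypothetical override hooks: default to the real GStreamer tools.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"
GST_INSPECT="${GST_INSPECT:-gst-inspect-1.0}"

# play_h264_mp4 FILE
play_h264_mp4() {
    if "$GST_INSPECT" vpudec >/dev/null 2>&1; then
        # Hardware path: the same pipeline as above
        "$GST_LAUNCH" filesrc location="$1" ! qtdemux ! h264parse \
            ! vpudec ! waylandsink
    else
        # Software fallback (assumes gst-libav provides avdec_h264);
        # videoconvert adapts the decoder output to a format waylandsink accepts
        "$GST_LAUNCH" filesrc location="$1" ! qtdemux ! h264parse \
            ! avdec_h264 ! videoconvert ! waylandsink
    fi
}

# Usage on a device: play_h264_mp4 videotestsrc.mp4
```

Software decoding is usually much slower than VPU decoding, so the fallback is mainly useful for functional tests.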

You can also use the decodebin element, which automatically creates a decoding pipeline, as follows:

# gst-launch-1.0 filesrc location=<encoded_file_location> ! decodebin ! waylandsink

Since the playbin element and the gst-play-1.0 command create playback pipelines, they also select the needed demultiplexing and decoding elements.

You can then use the automatically generated pipeline as a base for creating your own. To see a graph with the elements of the generated pipeline, check the Debugging Tools section.

Video Capturing

On embedded Linux, video capture devices, including CSI cameras, webcams, and video capture cards, are usually handled through the Video4Linux (V4L2) kernel API. GStreamer provides elements that use this API, such as v4l2src, offering a simple way of integrating those devices into pipelines. You can, for example, get camera data and display it in a window with the following pipeline:

# gst-launch-1.0 v4l2src device='/dev/video3' ! "video/x-raw, format=YUY2, framerate=5/1, width=640, height=480" ! waylandsink

Note that the v4l2src element handles the communication with the capture device, hiding the complexity of the V4L2 API from the rest of the pipeline.
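Capturing and encoding can be combined to record camera video to a file. The sketch below reuses the camera caps from the pipeline above and the encoding elements from the Video Encoding section. It assumes that the camera supports the YUY2, 640x480, 5 fps mode and that vpuenc_h264 is available; record_camera and the GST_LAUNCH override variable are hypothetical names. Because v4l2src is a GStreamer source element, it inherits the num-buffers property used here to stop after a given number of frames.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# record_camera DEVICE OUTPUT [SECONDS]  (default: 10 s)
record_camera() {
    device="$1"
    output="$2"
    seconds="${3:-10}"
    fps=5
    # Stop after seconds * fps frames; -e also finalizes the file on Ctrl+C
    "$GST_LAUNCH" -e v4l2src device="$device" num-buffers=$((seconds * fps)) \
        ! "video/x-raw,format=YUY2,framerate=${fps}/1,width=640,height=480" \
        ! videoconvert ! vpuenc_h264 ! h264parse ! mp4mux \
        ! filesink location="$output"
}

# Usage on a device: record_camera /dev/video3 camera.mp4 4   # 20 frames
```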

For details on using cameras on Toradex modules, see Cameras Use Cases.

Debugging Tools

GStreamer provides multiple tools that facilitate pipeline debugging. You can retrieve log messages at different levels of detail, as well as graphs of the built pipelines.

This section shows useful tips for debugging GStreamer pipelines.

Warning and Error Messages

The GST_DEBUG environment variable controls the level of debugging outputs. For example, with the following command, GStreamer pipelines start to display error and warning messages:

export GST_DEBUG=2

When an error message points to a specific element, the gst-inspect-1.0 command is handy for checking that element's properties and pad capabilities.

For more information about debugging levels, see GStreamer Debugging tools/level.
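Debug output can be long, so it often helps to enable it for a single command and send it to a file. The following sketch sets GST_DEBUG for one gst-launch-1.0 run, redirects the log with GST_DEBUG_FILE, and disables color codes with GST_DEBUG_NO_COLOR. run_with_debug and the GST_LAUNCH override variable are hypothetical names; the level string can also raise specific debug categories, such as GST_CAPS for caps negotiation messages.

```shell
#!/bin/sh
# Hypothetical override hook: defaults to the real gst-launch-1.0 binary.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"

# run_with_debug LEVEL LOGFILE PIPELINE...
run_with_debug() {
    level="$1"
    logfile="$2"
    shift 2
    # The variables apply only to this command: GST_DEBUG sets the level,
    # GST_DEBUG_FILE writes the log to a file instead of stderr, and
    # GST_DEBUG_NO_COLOR keeps escape codes out of that file.
    GST_DEBUG="$level" GST_DEBUG_FILE="$logfile" GST_DEBUG_NO_COLOR=1 \
        "$GST_LAUNCH" "$@"
}

# Usage on a device (warnings everywhere, caps negotiation at INFO level):
#   run_with_debug "2,GST_CAPS:4" /tmp/gst.log videotestsrc num-buffers=60 ! waylandsink
```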

Pipeline Graphs

GStreamer can generate graphs of the created pipelines. For example, you can build pipelines with the encodebin, decodebin, and playbin elements and check which specific elements they selected. That can serve as a guide for developing your own pipelines.

To get pipeline graphs, set the GST_DEBUG_DUMP_DOT_DIR environment variable to a path of your choice. GStreamer will use that path to save graphs in the .dot format.

You can convert .dot graphs to .png with the graphviz CLI, as follows:

dot -Tpng -o<output_file_name.png> <.dot_file>

For more information, see Getting pipeline graphs and Graphviz CLI.

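Pipelines may generate multiple .dot files, since gst-launch-1.0 saves a graph at several pipeline state changes. If a specific file does not show the graph you need, try the others; the file whose name contains PAUSED_PLAYING usually shows the complete pipeline.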
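The two steps above, dumping the graphs and converting them, can be combined so that every .dot file is converted at once. The following is a minimal sketch; graph_pipeline, dot_to_png, and the GST_LAUNCH and DOT override variables are hypothetical names, and the sketch assumes the Graphviz dot command is installed where the conversion runs (often a development PC rather than the module).

```shell
#!/bin/sh
# Hypothetical override hooks: default to the real tools.
GST_LAUNCH="${GST_LAUNCH:-gst-launch-1.0}"
DOT="${DOT:-dot}"

# dot_to_png DIR: convert each DIR/*.dot into a .png file next to it
dot_to_png() {
    for f in "$1"/*.dot; do
        [ -e "$f" ] || continue  # no .dot files: the glob stays unexpanded
        "$DOT" -Tpng "$f" -o "${f%.dot}.png"
    done
}

# graph_pipeline DIR PIPELINE...
graph_pipeline() {
    dir="$1"
    shift
    mkdir -p "$dir"
    # gst-launch-1.0 writes .dot files into this directory at state changes
    GST_DEBUG_DUMP_DOT_DIR="$dir" "$GST_LAUNCH" "$@"
    dot_to_png "$dir"
}

# Usage: graph_pipeline /tmp/graphs playbin uri=file:///home/root/video.mp4
```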

Additional Resources

Check the following articles for information on different use cases:

Operating Systems

- How to use GStreamer on Torizon OS: Contains information on handling and displaying video data with GStreamer within container environments. It also provides pipeline examples for different SoMs, including those that support hardware acceleration with VPU.

Cameras

- Cameras on Toradex System on Modules: An overview of the cameras supported by Toradex hardware and software.

- How to use Cameras on Torizon OS: Describes how to identify capture devices on Torizon OS, access cameras from within containers and handle camera data with GStreamer.

- First Steps with CSI Camera Set 5MP AR0521 Color (Torizon): A hands-on guide that shows how to connect cameras, enable Yocto layers and handle camera data with GStreamer on Torizon OS.

- First Steps with CSI Camera Set 5MP AR0521 Color (Linux): A hands-on guide that shows how to connect cameras, enable Yocto layers and handle camera data with GStreamer on Toradex BSPs.

Machine learning

- Torizon Sample: Real-Time Object Detection with Tensorflow Lite: Contains steps for running an object detection application with TensorFlow Lite on Torizon OS. In that application, GStreamer is used to handle video frames that serve as input to a Machine Learning model.

Audio

- Audio (Linux): Describes using the ALSA driver and GStreamer to record and play audio on embedded Linux.