How to use Cameras on Torizon

Introduction

This article provides guidance for your first steps with cameras on Torizon OS. It covers the specific details and considerations involved in using a video capture device from within containers.

The main goal of using Torizon and containers is to simplify the developer's life and keep the development model close to that of desktop or server applications. However, some requirements inherent to embedded systems development must be considered in the process. Running a video capture application inside a container involves aspects such as hardware access and running multiple containers. You can read more about these requirements in the section Container Requirements.

If you want to learn more about the Torizon best practices and container development workflow for embedded systems applications, you can refer to Torizon Best Practices Guide. Developing applications for Torizon can be done using command-line tools or, as we recommend, the Visual Studio Code Extension for Torizon.

The workflow in this article is valid for USB cameras that use the standard USB Video Class (UVC) driver and for some CSI cameras. We recommend using one of our supported CSI cameras, which you can find at Supported Cameras. The MIPI-CSI process described here was carried out with the CSI Camera Set 5MP AR0521 Color. To use the AR0521 camera you have to enable a Device Tree Overlay. See more at First Steps with CSI Camera Set 5MP AR0521 Color (Torizon).

To interact with video capture devices, we will use utilities provided by Video4Linux and GStreamer. For more information about these tools, refer to their respective articles.

This article complies with the Typographic Conventions for the Toradex Documentation.

Prerequisites

- A Toradex SoM with Torizon installed and running.

- Basic knowledge of GStreamer. You can refer to How to use Gstreamer on Torizon OS and Video Processing - GStreamer.

- (Optional) A camera to follow the instructions.

Camera usage with containers

Running your application in a container means that it runs in a sandboxed environment, which may limit its interaction with other components in the system. Hardware access and the use of multiple containers, mainly for a Graphical User Interface (GUI), are the most important topics to understand before working with cameras. You can read more about multiple containers at Using Multiple Containers with Torizon OS.

Hardware Access and Shared Resources

Docker provides ways to access specific hardware devices from inside a container, and often one needs to grant access from both a permission and a namespace perspective.

- Permissions: To grant GPU access to the container, as explained in the Torizon Best Practices Guide, you have to enable the cgroup rule c 226:* rmw and, on iMX8 SoMs, c 199:* rmw.

- Namespace: To add the camera device to the container you can use bind mounts, but passing devices (using --device) is better for security since you avoid exposing a storage device that may be erased by an attacker.

To use camera devices, you have to bind mount specific directories of the host machine into the container. Four bind mounts are required:

- /dev: Mounting /dev allows access to the devices that are attached to the local system.

- /tmp: Mounting /tmp as a volume in the container allows your application to interact with Weston (GUI).

- /sys: Grants access to kernel subsystems.

- /var/run/dbus: Provides access to system services.
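As a rough illustration, the bind mounts, device, and cgroup rules described above can be assembled into a `docker run` argument list. This is only a sketch: the function name `camera_container_args` and the image name `example-user/gst_example` are placeholders invented for this example, and the default cgroup rules are the two GPU rules mentioned earlier.

```python
import shlex


def camera_container_args(image, video_device,
                          cgroup_rules=("c 226:* rmw", "c 199:* rmw")):
    """Return the argument list for a `docker run` call exposing one camera."""
    # The four bind mounts described above (host path == container path).
    bind_mounts = ["/tmp", "/var/run/dbus", "/dev", "/sys"]

    args = ["docker", "run", "--rm", "-it"]
    for path in bind_mounts:
        args += ["-v", f"{path}:{path}"]
    # Pass only the camera node that the application needs.
    args += ["--device", video_device]
    for rule in cgroup_rules:
        args += ["--device-cgroup-rule", rule]
    args.append(image)
    return args


# Hypothetical image name and device node, used for illustration only.
args = camera_container_args("example-user/gst_example", "/dev/video3")
print(shlex.join(args))
```

The printed command can be pasted into a shell on the board, or the list can be handed to `subprocess.run` directly. Keeping the arguments in a list avoids quoting mistakes with rules such as `c 226:* rmw`, which contain spaces and a wildcard.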

Displaying the camera output is also useful, especially during the setup and debugging phases of application development, even if the final application does not need it. To do this, you can use a display by configuring a Weston-based container, or VNC. To read more about GUI in Torizon OS, refer to the section Graphical User Interface (GUI) in the Torizon Best Practices Guide.

Dockerfile

The implementation details are explained in this section. See the Quickstart Guide for instructions on how to build the image on a host PC and pull it onto the board. You can also scp the Dockerfile to the board and build it locally.

Building the Container Image

Make sure you have configured your Build Environment for Torizon Containers.

Now it's a good time to use torizon-samples repository or download the sample Dockerfile:

$ cd ~

$ git clone --branch bookworm https://github.com/toradex/torizon-samples.git

$ cd ~/torizon-samples/gstreamer/bash/simple-pipeline

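Then build the container image. The build arguments select the base image for your module. To build on top of the wayland-base-vivante base image, use: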

$ docker build --build-arg BASE_NAME=wayland-base-vivante --build-arg IMAGE_ARCH=linux/arm64/v8 -t <your-dockerhub-username>/gst_example .

To build with the default arguments defined in the sample Dockerfile, use:

$ docker build -t <your-dockerhub-username>/gst_example .

To build on top of the wayland-base-am62 base image, use:

$ docker build --build-arg BASE_NAME=wayland-base-am62 --build-arg IMAGE_ARCH=linux/arm64/v8 --build-arg IMAGE_TAG=3 -t <your-dockerhub-username>/gst_example .

After the build, push the image to your Docker Hub account:

$ docker push <your-dockerhub-username>/gst_example

For more details about the Dockerfile, refer to How to use Gstreamer on Torizon OS.

Hello world with a GStreamer pipeline

Before running a GStreamer pipeline inside a container, stop all the other containers that might be running on your device. This avoids possible problems when launching the containers required to show the video.

# docker stop $(docker ps -a -q)

Launching Weston Container

Since a video streaming application requires a GUI, you have to pull a Debian Bookworm container featuring the Weston Wayland compositor and start it on the module. Use the command below whose image name (for example, torizon/weston-imx8) matches your module:

# docker container run -d --name=weston --net=host \

--cap-add CAP_SYS_TTY_CONFIG \

-v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ \

--device-cgroup-rule="c 4:* rmw" --device-cgroup-rule="c 253:* rmw" \

--device-cgroup-rule="c 13:* rmw" --device-cgroup-rule="c 226:* rmw" \

--device-cgroup-rule="c 10:223 rmw" --device-cgroup-rule="c 199:0 rmw" \

--device-cgroup-rule="c 10:* rmw" \

torizon/weston-imx95:4 \

--developer

# docker container run -d --name=weston --net=host \

--cap-add CAP_SYS_TTY_CONFIG \

-v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ \

--device-cgroup-rule="c 4:* rmw" --device-cgroup-rule="c 253:* rmw" \

--device-cgroup-rule="c 13:* rmw" --device-cgroup-rule="c 226:* rmw" \

--device-cgroup-rule="c 10:223 rmw" --device-cgroup-rule="c 199:0 rmw" \

torizon/weston-imx8:4 \

--developer

# docker container run -d --name=weston --net=host \

--cap-add CAP_SYS_TTY_CONFIG -v /dev:/dev -v /tmp:/tmp \

-v /run/udev/:/run/udev/ \

--device-cgroup-rule="c 4:* rmw" --device-cgroup-rule="c 13:* rmw" \

--device-cgroup-rule="c 226:* rmw" --device-cgroup-rule="c 10:223 rmw" \

torizon/weston-am62:4 --developer

# docker container run -d --name=weston --net=host \

--cap-add CAP_SYS_TTY_CONFIG -v /dev:/dev -v /tmp:/tmp \

-v /run/udev/:/run/udev/ \

--device-cgroup-rule="c 4:* rmw" --device-cgroup-rule="c 13:* rmw" \

--device-cgroup-rule="c 226:* rmw" --device-cgroup-rule="c 10:223 rmw" \

torizon/weston-am62p:4 --developer

# docker container run -d --name=weston --net=host \

--cap-add CAP_SYS_TTY_CONFIG -v /dev:/dev -v /tmp:/tmp \

-v /run/udev/:/run/udev/ --device-cgroup-rule="c 4:* rmw" \

--device-cgroup-rule="c 13:* rmw" --device-cgroup-rule="c 226:* rmw" \

--device-cgroup-rule="c 10:223 rmw" \

torizon/weston:4 \

--developer

To learn more about the --device-cgroup-rule options used in the commands above, please refer to the Hardware Access through Control Group Rules (cgroup) section of the Torizon Best Practices Guide.
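To make the rule syntax easier to read, the following sketch splits a rule string into its fields: device type, major and minor number, and permissions. The names `CgroupRule` and `parse_cgroup_rule` are made up for this illustration and are not part of Docker or Torizon.

```python
from typing import NamedTuple


class CgroupRule(NamedTuple):
    dev_type: str     # "c" (character), "b" (block) or "a" (all)
    major: str        # major device number, or "*" for any
    minor: str        # minor device number, or "*" for any
    permissions: str  # combination of r (read), w (write), m (mknod)


def parse_cgroup_rule(rule: str) -> CgroupRule:
    """Split a rule such as 'c 226:* rmw' into its fields."""
    dev_type, numbers, permissions = rule.split()
    major, minor = numbers.split(":")
    if dev_type not in ("a", "b", "c"):
        raise ValueError(f"unknown device type: {dev_type!r}")
    if not set(permissions) <= set("rwm"):
        raise ValueError(f"unknown permission in: {permissions!r}")
    return CgroupRule(dev_type, major, minor, permissions)


rule = parse_cgroup_rule("c 226:* rmw")
print(rule)
```

Read this way, `c 226:* rmw` allows read, write, and mknod access to every character device with major number 226, while `c 10:223 rmw` targets a single device node.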

Discovering the Video Capture Device

If this is the first time you are dealing with a specific video device and you are not sure which device node is the capture one, follow the steps below to find the right video capture device:

- Still outside the container, list the video devices by using ls /dev/video*.

# ls /dev/video*

/dev/video0 /dev/video1 /dev/video2 /dev/video3 /dev/video12 /dev/video13

- Start the previously built container, passing all the video devices with the --device flag.

# docker run --rm -it -v /tmp:/tmp -v /var/run/dbus:/var/run/dbus -v /dev:/dev -v /sys:/sys \

--device /dev/video0 --device /dev/video1 --device /dev/video2 --device /dev/video3 --device /dev/video12 --device /dev/video13 \

--device-cgroup-rule='c 226:* rmw' --device-cgroup-rule='c 199:* rmw' \

<your-dockerhub-username>/<Dockerfile-name>

- Once inside the container, use the command v4l2-ctl --list-devices. Now you can see which devices are video capture ones.

## v4l2-ctl --list-devices

vpu B0 (platform:):

/dev/video12

/dev/video13

mxc-jpeg decoder (platform:58400000.jpegdec):

/dev/video0

mxc-jpeg decoder (platform:58450000.jpegenc):

/dev/video1

Video Capture 5 (usb-xhci-cdns3-1.2):

/dev/video2

/dev/video3

/dev/media0

- Use v4l2-ctl -D to find information about the Video Capture devices listed before. In this case, just /dev/video2 and /dev/video3. As you can see, the camera is represented by /dev/video3.

## v4l2-ctl --device /dev/video3 -D

Driver Info:

Driver name : uvcvideo

Card type : Metadata 5

Bus info : usb-xhci-cdns3-1.2

Driver version : 5.4.161

Capabilities : 0x84a00001

Video Capture

Metadata Capture

Streaming

Extended Pix Format

Device Capabilities

Device Caps : 0x04a00000

Metadata Capture

Streaming

Extended Pix Format

Media Driver Info:

Driver name : uvcvideo

Model : USB 2.0 Camera: USB Camera

Serial : 01.00.00

Bus info : usb-xhci-cdns3-1.2

Media version : 5.4.161

Hardware revision: 0x00000003 (3)

Driver version : 5.4.161

Interface Info:

ID : 0x03000005

Type : V4L Video

Entity Info:

ID : 0x00000004 (4)

Name : Metadata 5

Function : V4L2 I/O

- With the right video device identified, from now on you just need to enable access to that specific camera device (in this case /dev/video3) when you start the container.
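When several cameras are attached, reading the `v4l2-ctl --list-devices` output by eye becomes tedious. The sketch below groups the device nodes by the card description they are listed under. The function name `parse_list_devices` is an assumption made for this example, and the sample text is a shortened copy of the output shown in the steps above.

```python
def parse_list_devices(output: str) -> dict:
    """Map each card description to the device nodes listed under it."""
    devices = {}
    current = None
    for raw_line in output.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank separator lines
        if line.startswith("/dev/"):
            if current is not None:
                devices[current].append(line)
        elif line.endswith(":"):
            current = line[:-1]  # drop the trailing colon of the header
            devices.setdefault(current, [])
    return devices


# Shortened sample of the output shown in the steps above.
sample = """\
vpu B0 (platform:):
    /dev/video12
    /dev/video13

Video Capture 5 (usb-xhci-cdns3-1.2):
    /dev/video2
    /dev/video3
    /dev/media0
"""

for card, nodes in parse_list_devices(sample).items():
    print(card, "->", nodes)
```

Inside the container, the real text could be obtained with `subprocess.run(["v4l2-ctl", "--list-devices"], capture_output=True, text=True).stdout` and passed to the same function.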

Launching the GStreamer and Video4Linux2 Container

The next step is to launch the container with GStreamer and Video4Linux2, making sure to pass the right video device /dev/video* with the --device parameter.

# docker run --rm -it -v /tmp:/tmp -v /var/run/dbus:/var/run/dbus -v /dev:/dev -v /sys:/sys \

--device /dev/<video-device> \

--device-cgroup-rule='c 226:* rmw' --device-cgroup-rule='c 199:* rmw' \

<your-dockerhub-username>/<Dockerfile-name>

Creating a GStreamer pipeline

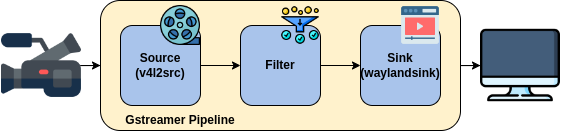

Once inside the container, you can use the Video4Linux2 and GStreamer tools. The basic structure of the pipeline consists of a data source (in this case a Video4Linux2 source), a filter, and a data sink (in this case the Wayland video sink).

One of the simplest pipelines for showing video has the following form:

## gst-launch-1.0 <videosrc> ! <capsfilter> ! <videosink>

Or, more specifically:

## gst-launch-1.0 v4l2src device=</dev/video*> ! <capsfilter> ! fpsdisplaysink video-sink=waylandsink

If you want to learn more about how to build a more complex GStreamer pipeline, refer to How to use Gstreamer on Torizon OS.

To discover more properties of v4l2src to configure the pads, you can use gst-inspect:

gst-inspect output

## gst-inspect-1.0 v4l2src

Factory Details:

Rank primary (256)

Long-name Video (video4linux2) Source

Klass Source/Video

Description Reads frames from a Video4Linux2 device

Author Edgard Lima <edgard.lima@gmail.com>, Stefan Kost <ensonic@users.sf.net>

Plugin Details:

Name video4linux2

Description elements for Video 4 Linux

Filename /usr/lib/gstreamer-1.0/libgstvideo4linux2.so

Version 1.16.2

License LGPL

Source module gst-plugins-good

Binary package GStreamer Good Plug-ins source release

Origin URL Unknown package origin

GObject

+----GInitiallyUnowned

+----GstObject

+----GstElement

+----GstBaseSrc

+----GstPushSrc

+----GstV4l2Src

Implemented Interfaces:

GstURIHandler

GstTuner

GstColorBalance

GstVideoOrientation

Pad Templates:

SRC template: 'src'

Availability: Always

Capabilities:

image/jpeg

video/mpeg

mpegversion: 4

systemstream: false

video/mpeg

mpegversion: { (int)1, (int)2 }

video/mpegts

systemstream: true

video/x-bayer

format: { (string)bggr, (string)gbrg, (string)grbg, (string)rggb }

width: [ 1, 32768 ]

height: [ 1, 32768 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-cavs

video/x-divx

divxversion: [ 3, 6 ]

video/x-dv

systemstream: true

video/x-flash-video

flvversion: 1

video/x-fwht

video/x-h263

variant: itu

video/x-h264

stream-format: { (string)byte-stream, (string)avc }

alignment: au

video/x-h265

stream-format: byte-stream

alignment: au

video/x-pn-realvideo

video/x-pwc1

width: [ 1, 32768 ]

height: [ 1, 32768 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-pwc2

width: [ 1, 32768 ]

height: [ 1, 32768 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-raw

format: { (string)RGB16, (string)BGR, (string)RGB, (string)RGBA, (string)GRAY8, (string)GRAY16_LE, (string)GRAY16_BE, (string)YVU9, (string)YV12, (string)YUY2, (string)YVYU, (string)UYVY, (string)Y42B, (string)Y41B, (string)v308, (string)YUV9, (string)NV12_10LE, (string)NV12_64Z32, (string)NV24, (string)NV61, (string)NV16, (string)NV21, (string)NV12, (string)I420, (string)xRGB, (string)BGRA, (string)BGRx, (string)ARGB, (string)BGR15, (string)RGB15 }

width: [ 1, 32768 ]

height: [ 1, 32768 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-sonix

width: [ 1, 32768 ]

height: [ 1, 32768 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-vp6-flash

video/x-vp8

video/x-vp9

video/x-wmv

wmvversion: 3

video/x-xvid

Element has no clocking capabilities.

URI handling capabilities:

Element can act as source.

Supported URI protocols:

v4l2

Pads:

SRC: 'src'

Pad Template: 'src'

Element Properties:

blocksize : Size in bytes to read per buffer (-1 = default)

flags: readable, writable

Unsigned Integer. Range: 0 - 4294967295 Default: 4096

brightness : Picture brightness, or more precisely, the black level

flags: readable, writable, controllable

Integer. Range: -2147483648 - 2147483647 Default: 0

contrast : Picture contrast or luma gain

flags: readable, writable, controllable

Integer. Range: -2147483648 - 2147483647 Default: 0

device : Device location

flags: readable, writable

String. Default: "/dev/video0"

device-fd : File descriptor of the device

flags: readable

Integer. Range: -1 - 2147483647 Default: -1

device-name : Name of the device

flags: readable

String. Default: null

do-timestamp : Apply current stream time to buffers

flags: readable, writable

Boolean. Default: false

extra-controls : Extra v4l2 controls (CIDs) for the device

flags: readable, writable

Boxed pointer of type "GstStructure"

flags : Device type flags

flags: readable

Flags "GstV4l2DeviceTypeFlags" Default: 0x00000000, "(none)"

(0x00000001): capture - Device supports video capture

(0x00000002): output - Device supports video playback

(0x00000004): overlay - Device supports video overlay

(0x00000010): vbi-capture - Device supports the VBI capture

(0x00000020): vbi-output - Device supports the VBI output

(0x00010000): tuner - Device has a tuner or modulator

(0x00020000): audio - Device has audio inputs or outputs

force-aspect-ratio : When enabled, the pixel aspect ratio will be enforced

flags: readable, writable

Boolean. Default: true

hue : Hue or color balance

flags: readable, writable, controllable

Integer. Range: -2147483648 - 2147483647 Default: 0

io-mode : I/O mode

flags: readable, writable

Enum "GstV4l2IOMode" Default: 0, "auto"

(0): auto - GST_V4L2_IO_AUTO

(1): rw - GST_V4L2_IO_RW

(2): mmap - GST_V4L2_IO_MMAP

(3): userptr - GST_V4L2_IO_USERPTR

(4): dmabuf - GST_V4L2_IO_DMABUF

(5): dmabuf-import - GST_V4L2_IO_DMABUF_IMPORT

name : The name of the object

flags: readable, writable

String. Default: "v4l2src0"

norm : video standard

flags: readable, writable

Enum "V4L2_TV_norms" Default: 0, "none"

(0): none - none

(45056): NTSC - NTSC

(4096): NTSC-M - NTSC-M

(8192): NTSC-M-JP - NTSC-M-JP

(32768): NTSC-M-KR - NTSC-M-KR

(16384): NTSC-443 - NTSC-443

(255): PAL - PAL

(7): PAL-BG - PAL-BG

(1): PAL-B - PAL-B

(2): PAL-B1 - PAL-B1

(4): PAL-G - PAL-G

(8): PAL-H - PAL-H

(16): PAL-I - PAL-I

(224): PAL-DK - PAL-DK

(32): PAL-D - PAL-D

(64): PAL-D1 - PAL-D1

(128): PAL-K - PAL-K

(256): PAL-M - PAL-M

(512): PAL-N - PAL-N

(1024): PAL-Nc - PAL-Nc

(2048): PAL-60 - PAL-60

(16711680): SECAM - SECAM

(65536): SECAM-B - SECAM-B

(262144): SECAM-G - SECAM-G

(524288): SECAM-H - SECAM-H

(3276800): SECAM-DK - SECAM-DK

(131072): SECAM-D - SECAM-D

(1048576): SECAM-K - SECAM-K

(2097152): SECAM-K1 - SECAM-K1

(4194304): SECAM-L - SECAM-L

(8388608): SECAM-Lc - SECAM-Lc

num-buffers : Number of buffers to output before sending EOS (-1 = unlimited)

flags: readable, writable

Integer. Range: -1 - 2147483647 Default: -1

parent : The parent of the object

flags: readable, writable

Object of type "GstObject"

pixel-aspect-ratio : Overwrite the pixel aspect ratio of the device

flags: readable, writable

String. Default: null

saturation : Picture color saturation or chroma gain

flags: readable, writable, controllable

Integer. Range: -2147483648 - 2147483647 Default: 0

typefind : Run typefind before negotiating (deprecated, non-functional)

flags: readable, writable, deprecated

Boolean. Default: false

Element Signals:

"prepare-format" : void user_function (GstElement* object,

gint arg0,

GstCaps* arg1,

gpointer user_data);

Since you are dealing with raw video, you have to use the video/x-raw capability in this case and configure its properties: format, width, height, and framerate.

## gst-launch-1.0 v4l2src device='/dev/<video-device>' ! "video/x-raw, format=<video-format>, framerate=<framerate>, width=<supported-width>, height=<supported-height>" ! fpsdisplaysink video-sink=waylandsink

You can also check information about the sink using gst-inspect:

gst-inspect output

## gst-inspect-1.0 waylandsink

Factory Details:

Rank primary + 2 (258)

Long-name wayland video sink

Klass Sink/Video

Description Output to wayland surface

Author Sreerenj Balachandran <sreerenj.balachandran@intel.com>, George Kiagiadakis <george.kiagiadakis@collabora.com>

Plugin Details:

Name waylandsink

Description Wayland Video Sink

Filename /usr/lib/gstreamer-1.0/libgstwaylandsink.so

Version 1.16.2

License LGPL

Source module gst-plugins-bad

Binary package GStreamer Bad Plug-ins source release

Origin URL Unknown package origin

GObject

+----GInitiallyUnowned

+----GstObject

+----GstElement

+----GstBaseSink

+----GstVideoSink

+----GstWaylandSink

Implemented Interfaces:

GstVideoOverlay

GstWaylandVideo

Pad Templates:

SINK template: 'sink'

Availability: Always

Capabilities:

video/x-raw

format: { (string)BGRx, (string)BGRA, (string)RGBx, (string)xBGR, (string)xRGB, (string)RGBA, (string)ABGR, (string)ARGB, (string)RGB, (string)BGR, (string)RGB16, (string)BGR16, (string)YUY2, (string)YVYU, (string)UYVY, (string)AYUV, (string)NV12, (string)NV21, (string)NV16, (string)YUV9, (string)YVU9, (string)Y41B, (string)I420, (string)YV12, (string)Y42B, (string)v308 }

width: [ 1, 2147483647 ]

height: [ 1, 2147483647 ]

framerate: [ 0/1, 2147483647/1 ]

video/x-raw(memory:DMABuf)

format: { (string)BGRx, (string)BGRA, (string)RGBx, (string)xBGR, (string)xRGB, (string)RGBA, (string)ABGR, (string)ARGB, (string)RGB, (string)BGR, (string)RGB16, (string)BGR16, (string)YUY2, (string)YVYU, (string)UYVY, (string)AYUV, (string)NV12, (string)NV21, (string)NV16, (string)YUV9, (string)YVU9, (string)Y41B, (string)I420, (string)YV12, (string)Y42B, (string)v308 }

width: [ 1, 2147483647 ]

height: [ 1, 2147483647 ]

framerate: [ 0/1, 2147483647/1 ]

Element has no clocking capabilities.

Element has no URI handling capabilities.

Pads:

SINK: 'sink'

Pad Template: 'sink'

Element Properties:

alpha : Wayland surface alpha value, apply custom alpha value to wayland surface

flags: readable, writable

Float. Range: 0 - 1 Default: 0

async : Go asynchronously to PAUSED

flags: readable, writable

Boolean. Default: true

blocksize : Size in bytes to pull per buffer (0 = default)

flags: readable, writable

Unsigned Integer. Range: 0 - 4294967295 Default: 4096

display : Wayland display name to connect to, if not supplied via the GstContext

flags: readable, writable

String. Default: null

enable-last-sample : Enable the last-sample property

flags: readable, writable

Boolean. Default: true

enable-tile : When enabled, the sink propose VSI tile modifier to VPU

flags: readable, writable

Boolean. Default: false

fullscreen : Whether the surface should be made fullscreen

flags: readable, writable

Boolean. Default: false

last-sample : The last sample received in the sink

flags: readable

Boxed pointer of type "GstSample"

max-bitrate : The maximum bits per second to render (0 = disabled)

flags: readable, writable

Unsigned Integer64. Range: 0 - 18446744073709551615 Default: 0

max-lateness : Maximum number of nanoseconds that a buffer can be late before it is dropped (-1 unlimited)

flags: readable, writable

Integer64. Range: -1 - 9223372036854775807 Default: 5000000

name : The name of the object

flags: readable, writable

String. Default: "waylandsink0"

parent : The parent of the object

flags: readable, writable

Object of type "GstObject"

processing-deadline : Maximum processing deadline in nanoseconds

flags: readable, writable

Unsigned Integer64. Range: 0 - 18446744073709551615 Default: 15000000

qos : Generate Quality-of-Service events upstream

flags: readable, writable

Boolean. Default: true

render-delay : Additional render delay of the sink in nanoseconds

flags: readable, writable

Unsigned Integer64. Range: 0 - 18446744073709551615 Default: 0

show-preroll-frame : Whether to render video frames during preroll

flags: readable, writable

Boolean. Default: true

sync : Sync on the clock

flags: readable, writable

Boolean. Default: true

throttle-time : The time to keep between rendered buffers (0 = disabled)

flags: readable, writable

Unsigned Integer64. Range: 0 - 18446744073709551615 Default: 0

ts-offset : Timestamp offset in nanoseconds

flags: readable, writable

Integer64. Range: -9223372036854775808 - 9223372036854775807 Default: 0

window-height : Wayland sink preferred window height in pixel

flags: readable, writable

Integer. Range: -1 - 2147483647 Default: -1

window-width : Wayland sink preferred window width in pixel

flags: readable, writable

Integer. Range: -1 - 2147483647 Default: -1

Keep in mind that different cameras may support different video formats, resolutions (width and height), and so on. Therefore, even the simplest pipeline can vary from one camera to another.

So, the final structure of the pipeline should be similar to the following one:

## gst-launch-1.0 v4l2src device='/dev/<video-device>' ! "video/x-raw, format=<video-format>, framerate=<framerate>, width=<supported-width>, height=<supported-height>" ! fpsdisplaysink video-sink=waylandsink text-overlay=<true-or-false> sync=<true-or-false>
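Because the caps values change from camera to camera, it can help to generate the pipeline text from parameters rather than editing it by hand. The following is a minimal sketch of that idea. The function `build_display_pipeline` is a hypothetical helper, and the values in the usage line are those of the webcam example in this article.

```python
def build_display_pipeline(device, video_format, width, height, framerate,
                           text_overlay=False, sync=False):
    """Assemble the gst-launch-1.0 arguments for a camera preview."""
    # Caps filter: restricts the source to one raw format and resolution.
    caps = (
        f"video/x-raw, format={video_format}, framerate={framerate}, "
        f"width={width}, height={height}"
    )
    # fpsdisplaysink wraps waylandsink; booleans are written as true/false.
    sink = (
        "fpsdisplaysink video-sink=waylandsink "
        f"text-overlay={str(text_overlay).lower()} sync={str(sync).lower()}"
    )
    return f"v4l2src device='{device}' ! \"{caps}\" ! {sink}"


pipeline = build_display_pipeline("/dev/video3", "YUY2", 640, 480, "5/1")
print("gst-launch-1.0 " + pipeline)
```

The format, resolution, and framerate passed in still have to be ones that the camera actually supports. The helper only fills in the template and does not query the device.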

The following subsections cover the specific considerations for using webcams and MIPI CSI-2 cameras with the process described above.

USB Camera

For information on how this interface is supported across different modules, see Camera on Toradex Computer on Modules.

The following pipeline was used to show video from a webcam:

## gst-launch-1.0 v4l2src device='/dev/video3' ! "video/x-raw, format=YUY2, framerate=5/1, width=640, height=480" ! fpsdisplaysink video-sink=waylandsink text-overlay=false sync=false

MIPI CSI-2 Camera

To use a MIPI-CSI camera you have to make sure that your module and carrier board have a MIPI-CSI connection. For information on how this interface is supported across different modules, see Camera on Toradex Computer on Modules.

Depending on the MIPI CSI-2 camera you are using, you may need to enable a specific device tree overlay. This example uses the Camera Set 5MP AR0521 Color; enabling its overlay with TorizonCore Builder is described in First Steps with CSI Camera Set 5MP AR0521 Color (Torizon).

With the overlay enabled, the next step is to launch a video stream using a pipeline. To achieve this, you should follow a process similar to the one described in the article First Steps with CSI Camera Set 5MP AR0521 Color (Torizon).

The following pipeline was used to show video with the Camera Set 5MP AR0521 Color:

## gst-launch-1.0 v4l2src device='/dev/video0' ! "video/x-raw, format=RGB16, framerate=30/1, width=1920, height=1080" ! fpsdisplaysink video-sink=waylandsink text-overlay=false sync=false

Hello world with Python and OpenCV

This hello-world application, like the direct use of GStreamer on the terminal, is just a video stream. For this application you are going to use OpenCV together with V4L2 and GStreamer, using the Visual Studio Code Extension for Torizon. If you want to learn more about OpenCV, refer to Torizon Sample: Using OpenCV for Computer Vision.

So, the first step is to create a Python application with QML, as described in Python development on Torizon OS. We are not going to use QML; we just need a GUI application that launches Weston.

Then, go to the Torizon Extension and make the following modifications. Add the following volumes under the volumes parameter:

- key=/dev, value=/dev

- key=/tmp, value=/tmp

- key=/sys, value=/sys

- key=/var/run/dbus, value=/var/run/dbus

Add access to the camera device (/dev/video2) under the devices parameter. Then add a cgroup rule under the extraparms parameter as follows: key=device_cgroup_rules and value=[ "c 199:* rmw", "c 226:* rmw" ]

Finally, modify the extrapackages field to include all the packages and plugins explained before, and add the OpenCV package for Python 3, which is python3-opencv:

python3-opencv v4l-utils gstreamer1.0-qt5 libgstreamer1.0-0 gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav gstreamer1.0-doc gstreamer1.0-tools gstreamer1.0-x gstreamer1.0-alsa gstreamer1.0-gl gstreamer1.0-gtk3 gstreamer1.0-pulseaudio

The code must be written inside main.py and begin with the import of the OpenCV library, called cv2:

import cv2

OpenCV provides the VideoCapture class used to capture the video. A pipeline similar to the one created before is going to be the input parameter of the class. The difference between the pipelines is the use of appsink instead of waylandsink, as the pipeline will send data to your program, not to a display.

cap = cv2.VideoCapture("v4l2src device=/dev/video2 ! video/x-raw, width=640, height=480, format=YUY2 ! videoconvert ! video/x-raw, format=BGR ! appsink")

To capture and read the frames we’re going to use read:

ret, frame = cap.read() # Capture frame

And to display the frames we can use imshow:

cv2.imshow('frame', frame) # Display the frames

Then, release the capture and destroy the window:

cap.release() # Release the capture object

cv2.destroyAllWindows() # Destroy all the windows

To read frames and display them continuously, you can put the capture and display lines inside a loop.
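Putting the pieces together, a continuous capture loop could look like the sketch below. It is only one possible arrangement: the helper names `build_capture_pipeline` and `show_stream` are invented for this example, the `cv2.CAP_GSTREAMER` argument is an addition that explicitly selects the GStreamer backend (it assumes an OpenCV build with GStreamer support), and exiting on the `q` key is an arbitrary choice.

```python
def build_capture_pipeline(device="/dev/video2", width=640, height=480,
                           video_format="YUY2"):
    """Return the GStreamer pipeline string that feeds frames to OpenCV."""
    return (
        f"v4l2src device={device} ! "
        f"video/x-raw, width={width}, height={height}, format={video_format} ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


def show_stream(pipeline):
    """Read frames from the pipeline and display them until 'q' is pressed."""
    import cv2  # imported here so the pipeline helper works without OpenCV

    cap = cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        raise RuntimeError("could not open the capture pipeline")
    try:
        while True:
            ret, frame = cap.read()  # capture one frame
            if not ret:
                break  # the pipeline stopped delivering frames
            cv2.imshow("frame", frame)  # display the frame
            # waitKey lets the window refresh; leave the loop on 'q'
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()  # release the capture object
        cv2.destroyAllWindows()  # destroy all the windows


pipeline = build_capture_pipeline()
# On the target, inside the container, start the preview with:
# show_stream(pipeline)
```

The `try`/`finally` block makes sure the camera is released even if an error occurs while frames are being displayed, so the device is free the next time the application starts.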