How to Execute Models Tuned by SageMaker Neo using DLR Runtime, Gstreamer and OpenCV on TorizonCore

Introduction

DLR is a compact runtime for Machine Learning Models. This runtime is used to run models compiled and tuned by Amazon SageMaker Neo.

In this article, we will show how to run Object Detection algorithms optimized by SageMaker Neo in an Apalis iMX8 board. We will also show how you can obtain frame data coming from a camera from a GStreamer pipeline, modify it with OpenCV and output to a display using a second GStreamer pipeline.

This sample does not support hardware acceleration.

Pre-requisites

Hardware

- An Apalis iMX8 with Torizon installed, as explained on the Quickstart Guide.

- A camera connected to the USB or MIPI CSI-2 interface.

- An HDMI monitor plugged into the carrier board.

Software and Knowledge

- Basic Docker and Torizon knowledge, by following the Quickstart Guide.

- Configure Build Environment for Torizon Containers.

- Awareness about TorizonCore Containers Tags and Versioning.

Running a demo with Object Detection

Toradex provides a simple demo for Object Detection using DLR runtime executing an SSD Object Detection algorithm based on ImageNet.

To execute this demo in your Apalis iMX8, with Torizon installed, first start the Weston container. Execute the following command in your board terminal:

Please, note that by executing the following line you are accepting the NXP's terms and conditions of the End-User License Agreement (EULA)

# docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=weston --net=host --cap-add CAP_SYS_TTY_CONFIG \

-v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ \

--device-cgroup-rule='c 4:* rmw' --device-cgroup-rule='c 13:* rmw' --device-cgroup-rule='c 199:* rmw' --device-cgroup-rule='c 226:* rmw' \

torizon/weston-vivante:$CT_TAG_WESTON_VIVANTE --developer --tty=/dev/tty7

After Weston start, run the demo image from dockerhub on the board:

Please, note that by executing the following line you are accepting the NXP's terms and conditions of the End-User License Agreement (EULA)

docker run -e ACCEPT_FSL_EULA=1 --rm -d --name=dlr-example --device-cgroup-rule='c 81:* rmw' -v /dev/galcore:/dev/galcore -v /run/udev/:/run/udev/ -v /sys:/sys -v /dev:/dev -v /tmp:/tmp --network host torizonextras/arm64v8-sample-dlr-gstreamer-vivante:${CT_TAG_WAYLAND_BASE_VIVANTE}

Learn more about the tag CT_TAG_WAYLAND_BASE_VIVANTE on TorizonCore Containers Tags and Versioning. Check the available tags for this sample on https://hub.docker.com/r/torizonextras/arm64v8-sample-dlr-gstreamer-vivante/tags.

This demo will get frames of a camera in /dev/video0, execute the object detection algorithm inference and output to a monitor using the Wayland protocol. Update inference.py file accordingly if necessary.

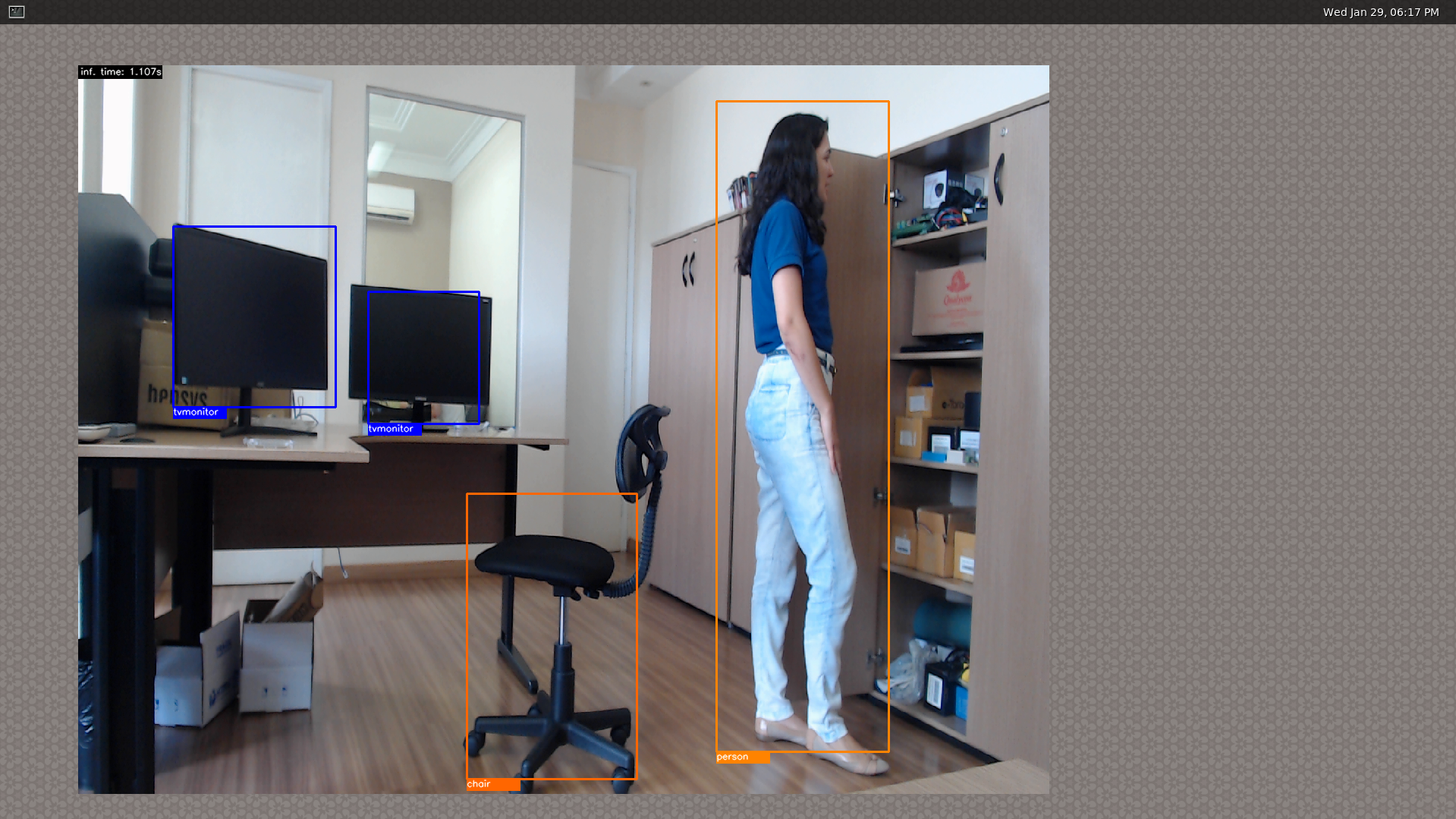

The example files contains a generic model for object detection, trained with ImageNet dataset , with the following object tags categories: 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor'.

To stop the containers:

# docker stop weston;docker stop dlr-example

Modifying the demo to use your own model

Get the source code

First, clone the torizon sample files from the repository. These files are the base for this article.

$ git clone https://github.com/toradex/torizon-samples.git

Change to the project's directory.

$ cd torizon-samples/dlr-gstreamer

This is the structure of the project's directory:

$ tree

.

├── Dockerfile

├── inference.py

├── model

│ ├── model.json

│ ├── model.params

│ └── model.so

└── README.txt

Dockerfile

The example's Dockerfile uses torizon/wayland-base-vivante image as starting point. The torizon/wayland-base-vivante image contains components used for image processing: The Vivante's GPU drivers and Wayland. For this image, we install OpenCV, GStreamer, NumPy, and also the DLR runtime.

The inference.py file

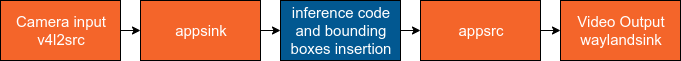

The project's Python script executes the application. It uses GStreamer's Python API to collect frames in RGB format, process it, and output the video.

Creating the GStreamer pipeline

We will use appsink GStreamer's element to collect frames in RGB format coming from the camera. It's counterpart - appsrc will put the processed frames into a second bus responsible for the image output.

The frames are then processed and used as input of the neural network. The following code snippet shows how the pipeline is created. In this example, we set the function on_new_frame to handle data when a new frame is available on appsink.

# GStreamer Init

Gst.init(None)

pipeline1_cmd="v4l2src device=/dev/video2 do-timestamp=True ! queue leaky=downstream ! videoconvert ! \

videoscale n-threads=4 method=nearest-neighbour ! \

video/x-raw,format=RGB,width="+str(width_out)+",height="+str(height_out)+" ! \

queue leaky=downstream ! appsink name=sink \

drop=True max-buffers=1 emit-signals=True max-lateness=8000000000"

pipeline2_cmd = "appsrc name=appsource1 is-live=True block=True ! \

video/x-raw,format=RGB,width="+str(width_out)+",height="+ \

str(height_out)+",framerate=10/1,interlace-mode=(string)progressive ! \

videoconvert ! waylandsink" #v4l2sink max-lateness=8000000000 device=/dev/video14"

pipeline1 = Gst.parse_launch(pipeline1_cmd)

appsink = pipeline1.get_by_name('sink')

appsink.connect("new-sample", on_new_frame, appsink)

pipeline2 = Gst.parse_launch(pipeline2_cmd)

appsource = pipeline2.get_by_name('appsource1')

pipeline1.set_state(Gst.State.PLAYING)

bus1 = pipeline1.get_bus()

pipeline2.set_state(Gst.State.PLAYING)

bus2 = pipeline2.get_bus()

Using DLR to run models tuned by AWS SageMaker Neo

To use DLR using python, the first step is to import the DLR runtime API. Look at the example's Dockerfile if you need to see how it is installed.

To import the DLR python API:

from dlr import DLRModel

On the script initialization, we need to set a DLR runtime model by indicating where our model files are located.

model = DLRModel('./model', 'cpu')

The run function of DLR will process the neural network.

outputs = model.run({'data': nn_input})

objects=outputs[0][0]

scores=outputs[1][0]

bounding_boxes=outputs[2][0]

In this example, nn_input is the neural network input - A NumPy array with the shape 240x240 containing the frame in RGB format.

The return of the run function is the output of the neural network with arrays containing the object categories detected, it's score and the corresponding bounding boxes.

The output data is processed by the script and OpenCV functions are used to draw the bounding boxes in the frame. In this demo, only the objects with score > 0.5 will be displayed.

OpenCV is used to draw bounding boxes:

#Draw bounding boxes

i = 0

while (scores[i]>0.5):

y1=int((bounding_boxes[i][1]-nn_input_size/8)*width_out/nn_input_size)

x1=int((bounding_boxes[i][0])*height_out/(nn_input_size*3/4))

y2=int((bounding_boxes[i][3]-nn_input_size/8)*width_out/nn_input_size)

x2=int((bounding_boxes[i][2])*height_out/(nn_input_size*3/4))

object_id=int(objects[i])

cv2.rectangle(img,(x2,y2),(x1,y1),colors[object_id%len(colors)],2)

cv2.rectangle(img,(x1+70,y2+15),(x1,y2),colors[object_id%len(colors)],cv2.FILLED)

cv2.putText(img,class_names[object_id],(x1,y2+10), cv2.FONT_HERSHEY_SIMPLEX, 0.4,(255,255,255),1,cv2.LINE_AA)

i=i+1

cv2.rectangle(img,(110,17),(0,0),(0,0,0),cv2.FILLED)

cv2.putText(img,"inf. time: %.3fs"%last_inference_time,(3,12), cv2.FONT_HERSHEY_SIMPLEX, 0.4,(255,255,255),1,cv2.LINE_AA)

The model folder

As explained earlier, the example contains model files trained with the ImageNet dataset.

You can replace the existing content of this folder by models trained with your own datasets, by replacing the files in this directory. For more information about how to train your own model, visit the Training your own model page

Building project

On the host PC,cd to the project's directory and build the image:

$ cd <Dockerfile-directory>

$ docker build -t <your-dockerhub-username>/dlr-example --cache-from torizonextras/dlr-example .

After the build, push the image to your Dockerhub account:

$ docker push <your-dockerhub-username>/dlr-example

Running project

This example will run a pipeline that uses waylandsink GStreamer's plugin. This plugin runs on top of Weston. It will be necessary to start 2 containers: One with the Weston image, and one with the application image with Wayland support. Both will communicate through shared directories.

First, start the container with the Weston. In the terminal of your board:

Please, note that by executing the following line you are accepting the NXP's terms and conditions of the End-User License Agreement (EULA)

# docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=weston --net=host --cap-add CAP_SYS_TTY_CONFIG -v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ --device-cgroup-rule='c 4:* rmw' --device-cgroup-rule='c 13:* rmw' --device-cgroup-rule='c 199:* rmw' --device-cgroup-rule='c 226:* rmw' torizon/weston-vivante:$CT_TAG_WESTON_VIVANTE --developer --tty=/dev/tty7

You will see the Weston screen in your HDMI monitor.

After Weston start, pull your application from your dockerhub account to the board.

# docker pull <your-dockerhub-username>/dlr-example

After the pull, run a container based on the image.

Please, note that by executing the following line you are accepting the NXP's terms and conditions of the End-User License Agreement (EULA)

docker run -e ACCEPT_FSL_EULA=1 --rm -d --name=dlr-example --device-cgroup-rule='c 81:* rmw' -v /dev/galcore:/dev/galcore -v /run/udev/:/run/udev/ -v /sys:/sys -v /dev:/dev -v /tmp:/tmp --network host <your-dockerhub-username>/dlr-example

The inference should be displayed on the screen.

To Stop the Application and Weston

# docker stop dlr-example

# docker stop weston