Torizon Sample: Real Time Object Detection with TensorFlow Lite

Introduction

TensorFlow is a popular open-source platform for machine learning. TensorFlow Lite is a set of tools to convert and run TensorFlow models on embedded devices.

Through Torizon, Toradex provides Debian Docker images and deb packages that greatly ease the development process for several embedded computing applications. This article shows how to run a Python application that uses TensorFlow Lite on the supported platforms.

If you want to learn more about machine learning using Toradex modules, see the article Building Machine Learning Software with Reference Images for Yocto Project. To find out more about the machine learning libraries available and NPU usage on i.MX8-based modules, see the i.MX Machine Learning User's Guide.

This article complies with the Typographic Conventions for Torizon Documentation.

Prerequisites

- A supported Toradex SoM with Torizon OS installed, as explained in the Quickstart Guide.

- A camera and basic knowledge of How to use Cameras on Torizon.

- Configure Build Environment for Torizon Containers on your Linux development PC.

Supported platforms

This sample has been validated only on the following platforms:

| Module | NPU Support | GPU Support | CPU Support[1] |
|---|---|---|---|
| Colibri iMX8QX Plus | N/A | ✅[2] | ✅ |
| Apalis iMX8 Quad Plus | N/A | ✅ | ✅ |
| Apalis iMX8 Quad Max | N/A | ✅ | ✅ |
| Verdin iMX8M Plus | ✅[3] | ✅ | ✅ |

- [1]: CPU inference is not recommended, as the performance is poor.

- [2]: GPU inference on the Colibri iMX8QX Plus is not recommended for this sample, as the performance is poor. Lighter machine learning models can be run using the provided container.

- [3]: Not all Verdin iMX8MP modules have an NPU. To identify which ones do, please refer to Verdin iMX8MP Table Comparison.

About this Sample Project

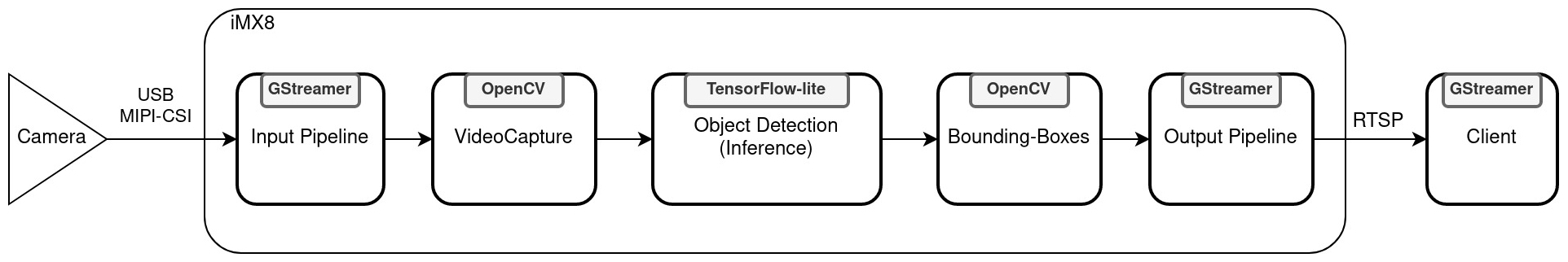

This example uses Tensorflow Lite 2.8.0 with Python. It executes a custom demo that captures video from a connected camera, runs object detection on the captured frames and streams the output via RTSP using GStreamer. This is summarized in the following diagram:

To enable hardware acceleration, the external delegate tensorflow-lite-vx-delegate is used.
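The following is a minimal sketch, not the sample's actual code, of how an external delegate is commonly attached to a TensorFlow Lite interpreter in Python. It assumes the `tflite_runtime` package is available in the container. The library path `/usr/lib/libvx_delegate.so` and the helper name `make_interpreter` are assumptions made for illustration; check the sample's sources for the real values.

```python
import importlib

# Assumption: location of the VX delegate shared library inside the container.
# The article does not state this path.
VX_DELEGATE_PATH = "/usr/lib/libvx_delegate.so"


def make_interpreter(model_path, use_hw_acceleration=True,
                     delegate_path=VX_DELEGATE_PATH, tflite=None):
    """Create a TensorFlow Lite interpreter, optionally with an external delegate.

    `tflite` can be passed explicitly (for example a stub in tests); by default
    the `tflite_runtime.interpreter` module is imported lazily.
    """
    if tflite is None:
        tflite = importlib.import_module("tflite_runtime.interpreter")

    delegates = []
    if use_hw_acceleration:
        # load_delegate() opens the shared library that implements the delegate.
        delegates.append(tflite.load_delegate(delegate_path))

    interpreter = tflite.Interpreter(model_path=model_path,
                                     experimental_delegates=delegates)
    # Tensors must be allocated before the first inference.
    interpreter.allocate_tensors()
    return interpreter
```

With hardware acceleration disabled, no delegate is loaded and inference falls back to the CPU, which matches the behavior described for `USE_HW_ACCELERATED_INFERENCE=0` later in this article.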

Build the Sample Project

This sample takes a long time to build. Depending on your build machine, it can take over two hours.

If you just want to run the sample, you can skip to Run the Sample Project.

Get the Torizon Samples Source Code

To obtain the files, clone the torizon-samples repository to your computer:

$ cd ~

$ git clone -b bookworm https://github.com/toradex/torizon-samples.git

Build the Sample

If you have not set up your build environment for Torizon, follow the steps in Enable Arm emulation.

After Arm emulation is configured, build the sample project:

$ cd ~/torizon-samples/tflite/tflite-rtsp

$ docker build -t <your-dockerhub-username>/tflite-rtsp .

After the build, push the image to your Docker Hub account:

$ docker push <your-dockerhub-username>/tflite-rtsp

Run the Sample Project

Find your Camera

Before running the sample, you need to find the camera that will be used as a capture device. To do this, follow the steps described in Discovering the Video Capture Device from the article How to use Cameras on Torizon.
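As a quick first check before following that article, the candidate device nodes can be listed with a few lines of Python. This is only a sketch: the helper name `list_video_devices` is hypothetical, and not every `/dev/videoN` node is necessarily a capture device, so the steps in the referenced article are still needed to confirm which node belongs to your camera.

```python
import re
from pathlib import Path


def list_video_devices(dev_dir="/dev"):
    """Return /dev/videoN-style paths found in dev_dir, sorted by N."""
    pattern = re.compile(r"^video(\d+)$")
    found = []
    for entry in Path(dev_dir).iterdir():
        match = pattern.match(entry.name)
        if match:
            # Keep the numeric index so video10 sorts after video2.
            found.append((int(match.group(1)), str(entry)))
    return [path for _, path in sorted(found)]


if __name__ == "__main__":
    for device in list_video_devices():
        print(device)
```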

Runtime Environment Variables

Several user-customizable environment variables can be passed to the docker run command. Their functions and possible values are shown in the following table:

| Variable | Default Value | Possible Values | Function |
|---|---|---|---|
| CAPTURE_DEVICE | /dev/video0 | /dev/videoX | The video capture device, to be opened with GStreamer's v4l2src. |
| USE_HW_ACCELERATED_INFERENCE | 1 | 0 or 1 | Enables the use of the external delegate tensorflow-lite-vx-delegate. For the iMX8MP, this means using the NPU. For the iMX8QM, iMX8QP and iMX8X, it means using the GPU. |
| USE_GPU_INFERENCE | 0 | 0 or 1 | Enables GPU inference, even on the iMX8MP. This variable has no effect if USE_HW_ACCELERATED_INFERENCE is set to 0. |
| MINIMUM_SCORE | 0.55 | 0.0 - 1.0 | Minimum score a detected object must reach for a bounding box to be drawn over it. |
| CAPTURE_RESOLUTION_X | 640 | 1 - 2147483647 | Horizontal capture resolution. May need to be changed depending on the supported formats of the capture device. |
| CAPTURE_RESOLUTION_Y | 480 | 1 - 2147483647 | Vertical capture resolution. May need to be changed depending on the supported formats of the capture device. |
| CAPTURE_FRAMERATE | 30 | 1 - 2147483647 | Capture and stream framerate. May need to be changed depending on the supported formats of the capture device. |
| STREAM_BITRATE | 2048 | 1 - 2048000 | Stream bitrate. |
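To make the defaults and the interaction between the two inference variables concrete, here is a hedged Python sketch that reads the variables from the table and validates them against the documented ranges. The sample's own parsing code is not shown in this article, so the function names `read_settings` and `describe_backend`, the dictionary keys, and the returned labels are all hypothetical.

```python
import os

INT32_MAX = 2147483647  # upper bound listed in the table


def read_settings(env=None):
    """Read the documented variables, applying the documented defaults."""
    env = os.environ if env is None else env

    def as_flag(name, default):
        value = env.get(name, default)
        if value not in ("0", "1"):
            raise ValueError(f"{name} must be 0 or 1, got {value!r}")
        return value == "1"

    def as_int(name, default, high):
        value = int(env.get(name, default))
        if not 1 <= value <= high:
            raise ValueError(f"{name}={value} is outside 1..{high}")
        return value

    score = float(env.get("MINIMUM_SCORE", "0.55"))
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"MINIMUM_SCORE={score} is outside 0.0..1.0")

    return {
        "capture_device": env.get("CAPTURE_DEVICE", "/dev/video0"),
        "use_hw": as_flag("USE_HW_ACCELERATED_INFERENCE", "1"),
        "use_gpu": as_flag("USE_GPU_INFERENCE", "0"),
        "minimum_score": score,
        "width": as_int("CAPTURE_RESOLUTION_X", "640", INT32_MAX),
        "height": as_int("CAPTURE_RESOLUTION_Y", "480", INT32_MAX),
        "framerate": as_int("CAPTURE_FRAMERATE", "30", INT32_MAX),
        "bitrate": as_int("STREAM_BITRATE", "2048", 2048000),
    }


def describe_backend(settings):
    """Summarize which backend the two inference flags select."""
    if not settings["use_hw"]:
        return "cpu"  # USE_GPU_INFERENCE has no effect in this case
    if settings["use_gpu"]:
        return "gpu"  # GPU is used, even on the iMX8MP
    return "platform-default"  # NPU on the iMX8MP, GPU on the other modules
```

For example, `describe_backend(read_settings({"USE_GPU_INFERENCE": "1"}))` returns `"gpu"`, while setting `USE_HW_ACCELERATED_INFERENCE` to `0` yields `"cpu"` regardless of `USE_GPU_INFERENCE`.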

Run command

Enter your module's terminal using SSH.

Launch the sample application using the following command. Make sure to change /dev/video0 to the correct capture device for your setup.

# docker run -it --rm -p 8554:8554 \
-v /dev:/dev \
-v /tmp:/tmp \
-v /run/udev/:/run/udev/ \
--device-cgroup-rule='c 4:* rmw' \
--device-cgroup-rule='c 13:* rmw' \
--device-cgroup-rule='c 199:* rmw' \
--device-cgroup-rule='c 226:* rmw' \
--device-cgroup-rule='c 81:* rmw' \
-e ACCEPT_FSL_EULA=1 \
-e CAPTURE_DEVICE=/dev/video0 \
-e USE_HW_ACCELERATED_INFERENCE=1 \
-e USE_GPU_INFERENCE=0 \
--name tflite-rtsp <image-tag>

Toradex provides pre-built images for this sample. To use one, replace <image-tag> in the run command with torizonextras/arm64v8-sample-tflite-rtsp:${CT_TAG_DEBIAN}.

Run the RTSP client

On your development machine, make sure GStreamer is installed. If you need to install it, refer to Installing GStreamer.

To view the output of this sample, run one of the following commands, depending on the display server of your development machine.

For X11:

$ gst-launch-1.0 rtspsrc location=rtsp://<module-ip>:8554/inference ! decodebin ! xvimagesink sync=false

For Wayland:

$ gst-launch-1.0 rtspsrc location=rtsp://<module-ip>:8554/inference ! decodebin ! waylandsink sync=false

Alternatively, a generic approach lets GStreamer select the video sink automatically. It has a higher delay and is only recommended as a test to see if your setup is working:

$ gst-launch-1.0 rtspsrc location=rtsp://<module-ip>:8554/inference ! decodebin ! autovideosink
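If you prefer to start the client from a script, the commands above can be assembled programmatically. The sketch below builds the same `gst-launch-1.0` argument lists in Python; the function name `rtsp_client_command` and the `"x11"`, `"wayland"` and `"generic"` labels are hypothetical, and the IP address in the usage note is a placeholder.

```python
# Sink arguments taken from the commands shown in this section.
SINKS = {
    "x11": ["xvimagesink", "sync=false"],
    "wayland": ["waylandsink", "sync=false"],
    "generic": ["autovideosink"],
}


def rtsp_client_command(module_ip, display="generic", port=8554):
    """Build the gst-launch-1.0 argument list for viewing the inference stream."""
    if display not in SINKS:
        raise ValueError(f"unknown display type: {display!r}")
    location = f"rtsp://{module_ip}:{port}/inference"
    return [
        "gst-launch-1.0",
        "rtspsrc", f"location={location}",
        "!", "decodebin",
        "!", *SINKS[display],
    ]
```

The returned list can be passed directly to `subprocess.run`, for example `subprocess.run(rtsp_client_command("192.168.1.10", "wayland"))`. Because the arguments are passed as a list, no shell quoting of the `!` separators is needed.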