Add Packages, Libraries, Tools and Files

Introduction

In this article, you will learn how to add external dependencies, libraries, tools and files to an existing single-container project created from one of the Torizon IDE Extension templates.

It includes instructions on modifying the torizonPackages.json file and gives a general direction for those who want to manually add packages, libraries, tools and files to Dockerfile, Dockerfile.debug, and Dockerfile.sdk.

Why Add Dependencies and Tools to an IDE Extension Project

Your application may require external libraries and utilities, which can be provided by your team or installed via Linux package managers. Torizon OS uses containers to package software resources. Hence, application dependencies are added to containers instead of the OS image. That offers isolation from your host machine, portability, reproducibility, and ease of application deployment.

Prerequisites

- Basic understanding of the Torizon IDE Extension.

- Toradex System on Module (SoM) with Torizon OS installed.

- Create a Single-Container Project.

The instructions provided in this article work for both Linux and Windows environments.

Base Container Images of Templates

The base container images of all Torizon IDE Extension templates are the Torizon Debian container images.

To check a template's base container image, look at the FROM instruction in its Dockerfiles. For example, this is the base image declared in Dockerfile.debug of the C++ CMake template:

[...]

ARG BASE_VERSION=4

[...]

FROM --platform=linux/${IMAGE_ARCH} \

torizon/debian:${BASE_VERSION} AS debug

[...]

For Torizon-supported templates the base container images are the torizon/ ones.

Add Debian Packages

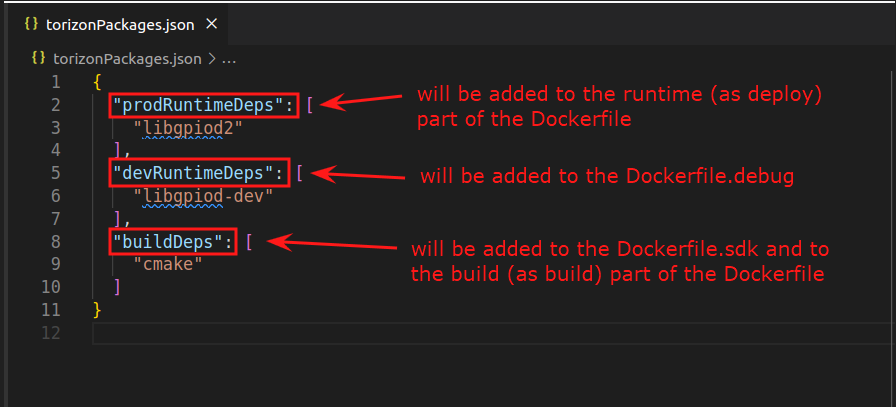

The Torizon IDE extension templates use Debian-based containers. These templates include the torizonPackages.json file, to which you can add packages available in both Debian and Toradex feeds. When building a project, packages specified in the torizonPackages.json file are automatically added to Dockerfile, Dockerfile.debug, and Dockerfile.sdk, according to the following rules:

- Packages in the devRuntimeDeps array are added to the runtime development/debug container image, on Dockerfile.debug for the debug/development image.

- Packages in the prodRuntimeDeps array are added to the runtime production/release container image, on the runtime part (defined with as Deploy at the end, in the cases where there is also a building part) of the Dockerfile for the production/release image.

- If there are building/compilation container images, packages in the buildDeps array are added to these images: on Dockerfile.sdk for the debug/development image (which is the building part for debug/development) and on the building part (defined with as build at the end) of the Dockerfile for the production/release image.
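The rules above can be restated as a small lookup table. The sketch below is only an illustration: the package names are examples, the target labels are descriptive strings made up for this snippet, and the code is not how the IDE Extension itself processes the file.

```python
import json

# Hypothetical torizonPackages.json content; package names are examples only.
TORIZON_PACKAGES = """
{
    "prodRuntimeDeps": ["libgpiod2"],
    "devRuntimeDeps": ["libgpiod2", "gdbserver"],
    "buildDeps": ["libgpiod-dev"]
}
"""

# Which files receive the packages of each array, following the rules above.
# The labels are descriptive strings chosen for this sketch.
TARGETS = {
    "devRuntimeDeps": ["Dockerfile.debug"],
    "prodRuntimeDeps": ["Dockerfile (runtime stage)"],
    "buildDeps": ["Dockerfile.sdk", "Dockerfile (build stage)"],
}


def plan(packages_json: str) -> dict[str, list[str]]:
    """Return a mapping of target file to the packages that would be added."""
    data = json.loads(packages_json)
    result: dict[str, list[str]] = {}
    for array_name, targets in TARGETS.items():
        for target in targets:
            result.setdefault(target, []).extend(data.get(array_name, []))
    return result


if __name__ == "__main__":
    for target, pkgs in plan(TORIZON_PACKAGES).items():
        print(f"{target}: {', '.join(pkgs)}")
```

Note that a package listed only in buildDeps never reaches the runtime images, so a library needed at run time must also appear in prodRuntimeDeps and devRuntimeDeps.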

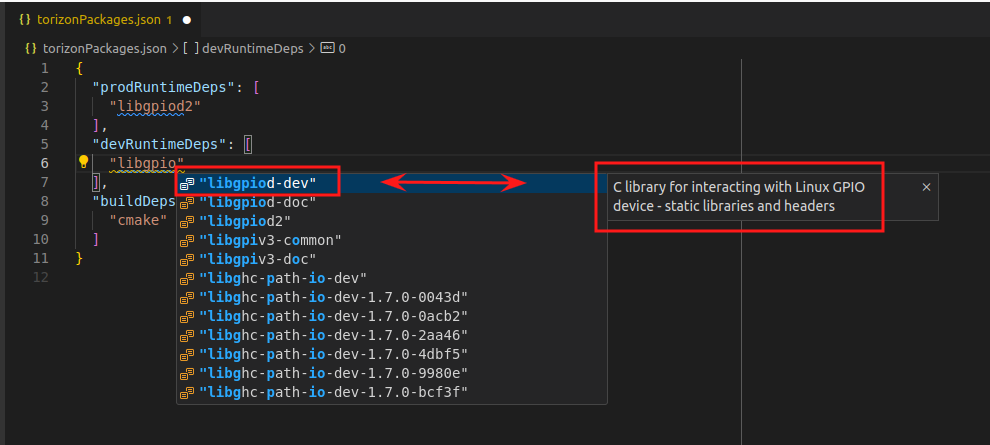

1. Choose the package: The IDE Extension supports autocompletion of Debian and Toradex feed packages in the torizonPackages.json file. To trigger it, press Ctrl + Space inside the array, wait for it to load, and then start typing the package name.

2. Check the package description: Below the list of packages, the description of the selected package is displayed.

If the description does not appear, press Ctrl + Space again, as this key binding is a VS Code shortcut that triggers the autocomplete suggestion.

Additionally, you can also configure the autocompletion feature.

Configure the Autocompletion Feature

By default, the autocomplete feature in torizonPackages.json searches for packages from the Debian bookworm release.

To select a different Debian release, modify the torizon.debianRelease setting to one of the following values:

- bookworm

- bullseye

- stable

- testing

- sid

Note that this configuration only affects the autocomplete feature and not the Debian release used by container images, which you can alter manually by editing the corresponding Dockerfile.

That setting can be applied globally or to a single project.

Globally:

- Open the VS Code command palette (press F1).

- Select Preferences: Open Settings (JSON).

- Add a line similar to the example below:

"torizon.debianRelease": "bullseye"

Project:

- Go to the .vscode/settings.json file, located in the project directory.

- Add the torizon.debianRelease setting and the desired Debian release.

The project setting overrides the global one.

Dockerfiles: The apply-torizon-packages task, which is automatically triggered whenever another task builds a container image, uses the snippets below to append packages from torizonPackages.json:

- Runtime part (as deploy) of Dockerfile (prodRuntimeDeps):

RUN apt-get -y update && apt-get install -y --no-install-recommends \

# ADD YOUR PACKAGES HERE

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

# __torizon_packages_prod_start__

# __torizon_packages_prod_end__

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

&& apt-get clean && apt-get autoremove && rm -rf /var/lib/apt/lists/*

- Dockerfile.debug (devRuntimeDeps). The build part on debugging is the Dockerfile.sdk image:

# automate for torizonPackages.json

RUN apt-get -q -y update && \

apt-get -q -y install \

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

# __torizon_packages_dev_start__

# __torizon_packages_dev_end__

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

&& \

apt-get clean && apt-get autoremove && \

rm -rf /var/lib/apt/lists/*

- Build part of Dockerfile (as build) and Dockerfile.sdk (buildDeps):

# automate for torizonPackages.json

RUN apt-get -q -y update && \

apt-get -q -y install \

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

# __torizon_packages_build_start__

# __torizon_packages_build_end__

# DO NOT REMOVE THIS LABEL: this is used for VS Code automation

&& \

apt-get clean && apt-get autoremove && \

rm -rf /var/lib/apt/lists/*
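To make the role of the marker comments concrete, here is a minimal sketch of how a tool could rewrite the lines between a start and an end marker. It is an assumption about the general technique, not the actual implementation of the apply-torizon-packages task; the function name splice_packages and the sample Dockerfile text are hypothetical.

```python
START = "# __torizon_packages_build_start__"
END = "# __torizon_packages_build_end__"

# Shortened sample of a build snippet, for illustration only.
SAMPLE = """RUN apt-get -q -y update && \\
    apt-get -q -y install \\
    # __torizon_packages_build_start__
    # __torizon_packages_build_end__
    && \\
    apt-get clean
"""


def splice_packages(dockerfile: str, packages: list[str]) -> str:
    """Replace whatever sits between the marker lines with one line per package.

    Each package line ends with a backslash so that the surrounding
    multi-line RUN instruction stays valid.
    """
    out: list[str] = []
    inside = False
    for line in dockerfile.splitlines():
        stripped = line.strip()
        if stripped == START:
            out.append(line)
            out.extend(f"    {pkg} \\" for pkg in packages)
            inside = True
        elif stripped == END:
            out.append(line)
            inside = False
        elif not inside:
            out.append(line)
        # Lines between the markers are dropped and regenerated.
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    print(splice_packages(SAMPLE, ["libgpiod-dev:arm64", "libasound2-dev:arm64"]))
```

Because everything between the markers is regenerated on each run, removing or renaming a marker line would leave such a tool with nowhere to write, which is why the labels must stay in place.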

Manual

You can manually add packages by directly editing Dockerfile, Dockerfile.debug, and Dockerfile.sdk. However, this is not recommended, because the IDE Extension cannot manage packages that were added manually to the different files.

[...]

RUN apt-get -q -y update && \

apt-get -q -y install \

libgpiod-dev:arm64 \

libasound2-dev:arm64 && \

apt-get clean && apt-get autoremove && rm -rf /var/lib/apt/lists/*

[...]

When manually adding packages to Dockerfile.sdk, include the SoM architecture tag after each entry (e.g., libgpiod-dev:armhf). Otherwise, Docker will install packages compatible with the host machine architecture (usually x64).

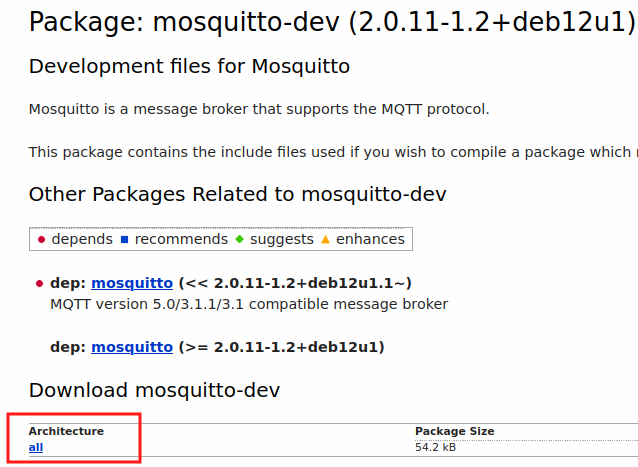

Also, certain Debian packages, such as mosquitto-dev, are available only for the all architecture. In this case, add the package as mosquitto-dev:all or just mosquitto-dev (without the SoM architecture tag).
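If you maintain a manual package list for Dockerfile.sdk, the tagging rule above can be sketched as a small helper. The function name with_arch_tag and the arch_all parameter are hypothetical; the set of architecture-independent packages has to be supplied by you, since this sketch does not query the Debian archive.

```python
def with_arch_tag(packages, arch, arch_all=frozenset()):
    """Append ':<arch>' to each package name unless it is already tagged
    or is listed in arch_all (packages that only exist for 'all')."""
    tagged = []
    for pkg in packages:
        if ":" in pkg or pkg in arch_all:
            tagged.append(pkg)  # keep explicit tags and arch-independent names
        else:
            tagged.append(f"{pkg}:{arch}")
    return tagged


if __name__ == "__main__":
    names = ["libgpiod-dev", "libasound2-dev", "mosquitto-dev"]
    print(" ".join(with_arch_tag(names, "arm64", arch_all={"mosquitto-dev"})))
```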

Microsoft .NET Packages Installation

NuGet

While developing your projects, you may need to add .NET packages using the NuGet package manager. It is a way to reuse community code and streamline your application development.

To add .NET packages, run the following command in the VS Code terminal:

dotnet add package <PackageName>

For example, our GPIO sample requires the System.Device.Gpio .NET package. To add it, run the following command in the VS Code terminal:

dotnet add package System.Device.Gpio

Package Dependencies

Sometimes .NET packages require installing C libraries. You can search for package dependencies on the NuGet page or in the package documentation. Since applications run inside a container on Torizon, these libraries must be added to the container image. To do so, modify the torizonPackages.json file as described in the Add Debian Packages section.

Python Pip Packages Installation

While developing your projects, you may need to add Python packages using the pip package manager. It is a way to use software that is not part of the default Python library.

Python packages to be installed by pip are defined in a file named requirements.txt. To learn about this file, see Requirements File Format.

The Toradex Python template comes with three requirements files, each used at a different development stage:

- requirements-local.txt: Add libraries to your host machine. In this case, running the application requires setting up a virtual environment on VS Code.

- requirements-debug.txt: Add libraries to the debug container image. For more information about this container, see Remote Deploy and Debug Projects.

- requirements-release.txt: Add libraries to the release container image. For more information about this type of container, see Build, Test and Push Applications for Production.

Compile C / C++ Packages

While developing your projects, you may need to add C/C++ libraries that are not part of the default C/C++ library. The IDE Extension uses Makefile to automate the build and cross-compilation process.

To include external libraries:

- Add the library package to the torizonPackages.json file.

- Link the library to your application: Add it to the LDFLAGS variable of the Makefile. For example:

CC := gcc

CCFLAGS := -Iincludes/

DBGFLAGS := -g

- LDFLAGS :=

+ LDFLAGS := -l<library-1> -l<library-2> -l<library-3>

CCOBJFLAGS := $(CCFLAGS) -c

ARCH :=

Workspace Files And Files Inside Containers

Windows Filesystem and Linux Filesystem

On Windows, keeping the project workspace (folder) in the Windows filesystem (under /mnt/c/..., for example) may lead to unexpected errors and behaviors. The templates are based on Linux containers, which expect a Linux filesystem.

So, the project folder must be in the Linux filesystem, inside WSL. The IDE extension validates the path when the project is created and blocks the creation if the path is under /mnt/<drive letter> (for example, /mnt/c, /mnt/d, or /mnt/e).
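To check a path yourself before creating or moving a project, a test along the following lines can help. This is a sketch that assumes the default WSL automount root (/mnt) and lowercase single-letter drive directories; it is not the validation code used by the IDE extension, and the function name is hypothetical.

```python
import re

# Matches /mnt/<single lowercase letter>, optionally followed by more path.
_WINDOWS_MOUNT = re.compile(r"^/mnt/[a-z](/|$)")


def is_on_windows_mount(path: str) -> bool:
    """Return True if the absolute path points into a Windows drive mounted by WSL."""
    return bool(_WINDOWS_MOUNT.match(path))


if __name__ == "__main__":
    for candidate in ("/mnt/c/Users/me/project", "/home/me/project"):
        verdict = "Windows filesystem" if is_on_windows_mount(candidate) else "Linux filesystem"
        print(f"{candidate}: {verdict}")
```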

If you want to copy something that is in your Windows filesystem to your project's folder, you can easily access the Linux filesystem files from the Windows side both on Windows File Explorer and on PowerShell, in the ways described in this Microsoft article.

SDK Container

Some projects use a container with the SDK toolchain to cross-compile your code for SoM architectures. The generated build files are stored in the project workspace and used by the debug container.

Since containers run in an isolated environment, the SDK container requires mounting (bind mount) the workspace directory into it. This container is defined by Dockerfile.sdk and executed on the host machine.

For example, the task below is part of the C++ CMake template and runs the cmake --build build-arm64 command inside the SDK container. That task compiles the code for the ARM64 architecture and stores the build files in the build-arm64 workspace directory.

{

"label": "build-debug-arm64",

"detail": "Build a debug version of the application for arm64 using\nthe toolchain from the SDK container.",

"command": "DOCKER_HOST=",

"type": "shell",

"args": [

"docker",

"run",

"--rm",

"-it",

"-v",

"${workspaceFolder}:${config:torizon_app_root}",

"cross-toolchain-arm64-__container__",

"cmake",

"--build",

"build-arm64"

],

"problemMatcher": [

"$gcc"

],

"icon": {

"id": "flame",

"color": "terminal.ansiYellow"

},

"dependsOrder": "sequence",

"dependsOn": [

"build-configure-arm64"

]

},

The flag that performs the bind-mount is -v ${workspaceFolder}:${config:torizon_app_root}. Since the directory is shared between the workspace and the SDK container, build files generated inside the SDK container automatically appear in the workspace.

As a result of bind-mounting, the SDK can access previous builds in the workspace and does not need to build everything from scratch every time.

Debug Container

The debug container is deployed and executed on the target device when debugging applications. This container is defined by Dockerfile.debug.

Since this container is built on the host machine and executed on the SoM, it is not possible to bind-mount the workspace directory.

Instead, build files (generated by the SDK container or a framework-specific SDK, like the .NET CLI) are copied to the debug container through SSH. To verify what is copied to the debug container, check the deploy-torizon-<arch> task in the tasks.json file.

Docker push and pull cannot skip compression, which slows down the debug cycle. To speed up the build-and-run pipeline during debugging, the build files are therefore copied to the debug container over SSH instead of through a COPY Docker instruction.

Example of the deploy-torizon-arm64 task in the tasks.json file:

{

"label": "deploy-torizon-arm64",

"detail": "",

"hide": true,

"command": "rsync",

"type": "process",

"args": [

"-P",

"-av",

"-e",

"ssh -p ${config:torizon_debug_ssh_port} -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null",

"${workspaceFolder}/build-arm64/bin/",

"${config:torizon_run_as}@${config:torizon_ip}:${config:torizon_app_root}"

],

"dependsOn": [

"validate-settings",

"validate-arch-arm64",

"copy-docker-compose",

"pre-cleanup",

"build-debug-arm64",

"build-container-torizon-debug-arm64",

"push-container-torizon-debug-arm64",

"pull-container-torizon-debug-arm64",

"run-container-torizon-debug-arm64"

],

"dependsOrder": "sequence",

"problemMatcher": "$msCompile",

},

The config variables are defined in .vscode/settings.json. For details of each variable check the Workspace - Settings article.

Only the build-arm64/bin subdirectory is copied, because the executables that compilation places there are all the application needs to run.

Release Container

The release container is the one that should be deployed and executed during production on SoMs. This container is defined by Dockerfile.

When the code is compiled, Dockerfile uses a multi-stage build. That means there is a build and a runtime container:

- The build container runs in the host machine and compiles the code. It can be identified by the as Build alias.

- The runtime container, like the debug container, is executed on the SoM. It can be identified by the as Deploy alias.

The compiled code is copied from the build stage to the runtime stage through a COPY instruction. The --from flag selects the build stage as the source:

COPY --from=build ${APP_ROOT}/build-${IMAGE_ARCH}/bin ${APP_ROOT}

The multi-stage build guarantees the same environment every time the code is compiled and optimizes the container image.

Adding Files to Containers

The project's workspace becomes available inside the container in different ways.

The SDK container mounts the project's workspace into the container, so no additional configuration is required.

The debug container contains only a copy of the build directory. For other files/directories, add another COPY command to Dockerfile.debug. For example, suppose the scenario below:

- Source: An assets directory in your workspace.

- Target (container): An assets directory in the application's root directory.

The COPY would be as follows:

COPY ./assets ${APP_ROOT}/assets

The APP_ROOT variable, defined in .vscode/settings.json, sets the directory to which the files are copied. The value of IMAGE_ARCH is set automatically according to the target's architecture. For ARM64, for example, it is set to IMAGE_ARCH=arm64.

For the debug container, it is also possible to copy this assets directory through rsync, either by adding an rsync task as explained in the Synchronization Task Example with rsync section or by adding it to the task that performs the rsync on the debugging pipeline as shown below:

{

"label": "deploy-torizon-arm64",

"detail": "",

"hide": true,

"command": "rsync",

"type": "process",

"args": [

"-P",

"-av",

"-e",

"ssh -p ${config:torizon_debug_ssh_port} -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null",

"${workspaceFolder}/build-arm64/bin/",

"${workspaceFolder}/assets",

"${config:torizon_run_as}@${config:torizon_ip}:${config:torizon_app_root}"

],

[...]

},

Copying the entire workspace to the container is possible but not recommended. Doing so significantly increases the container image size, affecting the time needed to deploy and execute it.

For the release container, the COPY command is the same as for the debug container if the source directory comes from the project's workspace.

If the container uses a multi-stage build, you can copy files/directories from one stage to another. For example, suppose the compilation generates a lib directory you want to copy to the runtime container image. In that case, you would add the following command, where --from selects the build stage as the source:

COPY --from=build ${APP_ROOT}/build-${IMAGE_ARCH}/lib ${APP_ROOT}/lib

Adding Files Shared Between Projects

If, for example, you have a custom library shared between multiple projects, that library will be in a directory outside of the project's workspace.

Suppose you have two projects at /home/projects/myproject1 and /home/projects/myproject2, and a custom library, located at /home/libraries/mylib, is used by both projects. You would have the following file structure:

home

├── libraries

│ └── mylib

└── projects

├── myproject1

└── myproject2

To access, compile, or debug that library with your project's code, create an extra task in tasks.json. That task should synchronize the library's folder with your project's workspace.

Synchronization Task Example with rsync

To synchronize the /home/libraries/mylib folder and the /home/projects/myproject1/libraries/mylib folder for debugging, follow the steps below:

- Create the rsync-mylib task in tasks.json:

{

"label": "rsync-mylib",

"detail": "",

"hide": true,

"command": "rsync",

"type": "process",

"args": [

"-av",

"/home/libraries/mylib",

"./libraries"

],

"dependsOrder": "sequence",

"problemMatcher": "$msCompile",

"icon": {

"id": "layers",

"color": "terminal.ansiCyan"

}

},

- Identify the debugger configuration that corresponds to your SoM architecture in launch.json. The snippet below shows the Torizon ARMv8 (ARM64) configuration:

{

"name": "Torizon ARMv8",

[...]

"preLaunchTask": "deploy-torizon-arm64"

},

- Copy the name of the task defined in the preLaunchTask attribute. In this example, it is deploy-torizon-arm64.

- Add the created task to the dependsOn attribute of the deploy-torizon-arm64 task as follows:

"dependsOn": [

"validate-settings",

"validate-arch-arm64",

"copy-docker-compose",

"pre-cleanup",

"rsync-mylib",

"build-debug-arm64",

"build-container-torizon-debug-arm64",

"push-container-torizon-debug-arm64",

"pull-container-torizon-debug-arm64",

"run-container-torizon-debug-arm64"

],
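If you apply the same change to several projects, the dependsOn edit can be scripted. The sketch below works on task definitions that are already loaded as Python dictionaries; the add_dependency helper is hypothetical, and loading a real tasks.json is left out on purpose because that file may contain comments or trailing commas that the standard json module rejects.

```python
def add_dependency(tasks, task_label, new_dep, before):
    """Insert new_dep into the dependsOn list of the task named task_label,
    placing it just before the entry `before` (or at the end if absent).

    Returns True if the task was found, False otherwise.
    """
    for task in tasks:
        if task.get("label") == task_label:
            deps = task.setdefault("dependsOn", [])
            if new_dep not in deps:
                index = deps.index(before) if before in deps else len(deps)
                deps.insert(index, new_dep)
            return True
    return False


if __name__ == "__main__":
    # Shortened, illustrative task list.
    tasks = [
        {
            "label": "deploy-torizon-arm64",
            "dependsOn": ["validate-settings", "pre-cleanup", "build-debug-arm64"],
        }
    ]
    add_dependency(tasks, "deploy-torizon-arm64", "rsync-mylib", before="build-debug-arm64")
    print(tasks[0]["dependsOn"])
```

Placing rsync-mylib before build-debug-arm64 matters because dependsOrder is set to sequence: the library must be synchronized into the workspace before the build task compiles it.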

Device Tree Overlays

Using the Torizon IDE extension, it is also possible to list the device tree overlays available on the board and apply the desired ones. The steps performed by the Torizon IDE extension are the same as the ones described in the corresponding section of the Device Tree Overlays on Torizon article.

Watch the video below to learn how to use it: